Decorators

Decorators are an upcoming ECMAScript feature that allows us to customize classes and their members in a reusable way.

Let’s consider the following code:

ts

greet is pretty simple here, but let’s imagine it’s something way more complicated - maybe it does some async logic, it’s recursive, it has side effects, etc.

Regardless of what kind of ball-of-mud you’re imagining, let’s say you throw in some console.log calls to help debug greet.

ts

This pattern is fairly common. It sure would be nice if there was a way we could do this for every method!

This is where decorators come in.

We can write a function called loggedMethod that looks like the following:

ts

“What’s the deal with all of these anys?

What is this, anyScript!?”

Just be patient - we’re keeping things simple for now so that we can focus on what this function is doing.

Notice that loggedMethod takes the original method (originalMethod) and returns a function that

- logs an “Entering…” message

- passes along this and all of its arguments to the original method

- logs an “Exiting…” message, and

- returns whatever the original method returned.
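A minimal sketch of a function with this shape, with loose types kept on purpose. The Person class and the exact log messages are assumptions made for illustration, and the wrapper is applied by hand here so that its effect is visible without relying on decorator syntax.

```ts
// Sketch only: takes the original method and returns a wrapper around it.
function loggedMethod(originalMethod: any, _context: any) {
    function replacementMethod(this: any, ...args: any[]) {
        console.log("LOG: Entering method.");
        // Pass along `this` and every argument to the original method.
        const result = originalMethod.call(this, ...args);
        console.log("LOG: Exiting method.");
        // Hand back whatever the original method returned.
        return result;
    }
    return replacementMethod;
}

// Hypothetical class used to exercise the wrapper.
class Person {
    name: string;
    constructor(name: string) {
        this.name = name;
    }
    greet(): string {
        const message = `Hello, my name is ${this.name}.`;
        console.log(message);
        return message;
    }
}

// Manual application: swap the prototype method for the wrapped version.
// The second argument is a stand-in for the context object (an assumption here).
Person.prototype.greet = loggedMethod(Person.prototype.greet, { kind: "method", name: "greet" });
```

Calling greet on any Person instance after this point goes through replacementMethod first, which is the same replacement a decorator performs.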

Now we can use loggedMethod to decorate the method greet:

ts

We just used loggedMethod as a decorator above greet - and notice that we wrote it as @loggedMethod.

When we did that, it got called with the method target and a context object.

Because loggedMethod returned a new function, that function replaced the original definition of greet.

We didn’t mention it yet, but loggedMethod was defined with a second parameter.

It’s called a “context object”, and it has some useful information about how the decorated method was declared - like whether it was a #private member, or static, or what the name of the method was.

Let’s rewrite loggedMethod to take advantage of that and print out the name of the method that was decorated.

ts

We’re now using the context parameter - and notice that it’s the first thing in loggedMethod that has a type stricter than any and any[].

TypeScript provides a type called ClassMethodDecoratorContext that models the context object that method decorators take.
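One way such a rewrite might look, as a sketch: only the context parameter gets a real type, ClassMethodDecoratorContext, and its name property feeds the log messages. The Person class and the message wording are illustrative assumptions.

```ts
function loggedMethod(originalMethod: any, context: ClassMethodDecoratorContext) {
    // `context.name` is a string or a symbol, so convert it before printing.
    const methodName = String(context.name);

    function replacementMethod(this: any, ...args: any[]) {
        console.log(`LOG: Entering method '${methodName}'.`);
        const result = originalMethod.call(this, ...args);
        console.log(`LOG: Exiting method '${methodName}'.`);
        return result;
    }
    return replacementMethod;
}

// Hypothetical class; the decorator is applied with the @ syntax.
class Person {
    name: string;
    constructor(name: string) {
        this.name = name;
    }

    @loggedMethod
    greet(): string {
        return `Hello, my name is ${this.name}.`;
    }
}
```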

Apart from metadata, the context object for methods also has a useful function called addInitializer.

It’s a way to hook into the beginning of the constructor (or the initialization of the class itself if we’re working with statics).

As an example - in JavaScript, it’s common to write something like the following pattern:

ts

Alternatively, greet might be declared as a property initialized to an arrow function.

ts

This code is written to ensure that this isn’t re-bound if greet is called as a stand-alone function or passed as a callback.

ts

We can write a decorator that uses addInitializer to call bind in the constructor for us.

ts

bound isn’t returning anything - so when it decorates a method, it leaves the original alone.

Instead, it will add logic before any other fields are initialized.

ts
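A sketch of how the two decorators could be combined on one method. The bodies of bound and loggedMethod follow the descriptions in the text, while the Person class, the error message, and the log wording are assumptions for illustration.

```ts
function loggedMethod(originalMethod: any, context: ClassMethodDecoratorContext) {
    const methodName = String(context.name);
    function replacementMethod(this: any, ...args: any[]) {
        console.log(`LOG: Entering method '${methodName}'.`);
        const result = originalMethod.call(this, ...args);
        console.log(`LOG: Exiting method '${methodName}'.`);
        return result;
    }
    return replacementMethod;
}

function bound(_originalMethod: any, context: ClassMethodDecoratorContext) {
    const methodName = context.name;
    if (context.private) {
        throw new Error(`'bound' cannot decorate private members like ${String(methodName)}.`);
    }
    // Registered once per decorated method; runs for each new instance.
    context.addInitializer(function (this: any) {
        this[methodName] = this[methodName].bind(this);
    });
    // Nothing is returned, so the method definition itself is left alone.
}

class Person {
    name: string;
    constructor(name: string) {
        this.name = name;
    }

    @bound
    @loggedMethod
    greet(): string {
        return `Hello, my name is ${this.name}.`;
    }
}

// Detached from its instance, yet `this` still refers to the Person.
const detachedGreet = new Person("Ray").greet;
```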

Notice that we stacked two decorators - @bound and @loggedMethod.

These decorations run in “reverse order”.

That is, @loggedMethod decorates the original method greet, and @bound decorates the result of @loggedMethod.

In this example, it doesn’t matter - but it could if your decorators have side-effects or expect a certain order.

Also worth noting - if you’d prefer stylistically, you can put these decorators on the same line.

ts

Something that might not be obvious is that we can even make functions that return decorator functions.

That makes it possible to customize the final decorator just a little.

If we wanted, we could have made loggedMethod return a decorator and customize how it logs its messages.

ts

If we did that, we’d have to call loggedMethod before using it as a decorator.

We could then pass in any string as the prefix for messages that get logged to the console.

ts
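A sketch of both halves, the factory and its use. The default prefix, the "[trace]" prefix, and the Person class are assumptions chosen for illustration.

```ts
// Calling loggedMethod produces the actual decorator, closed over headMessage.
function loggedMethod(headMessage = "LOG:") {
    return function actualDecorator(originalMethod: any, context: ClassMethodDecoratorContext) {
        const methodName = String(context.name);

        function replacementMethod(this: any, ...args: any[]) {
            console.log(`${headMessage} Entering method '${methodName}'.`);
            const result = originalMethod.call(this, ...args);
            console.log(`${headMessage} Exiting method '${methodName}'.`);
            return result;
        }
        return replacementMethod;
    };
}

class Person {
    name: string;
    constructor(name: string) {
        this.name = name;
    }

    // Note the call: the decorator is the value returned by loggedMethod(...).
    @loggedMethod("[trace]")
    greet(): string {
        return `Hello, my name is ${this.name}.`;
    }
}
```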

Decorators can be used on more than just methods! They can be used on properties/fields, getters, setters, and auto-accessors. Even classes themselves can be decorated for things like subclassing and registration.

To learn more about decorators in-depth, you can read up on Axel Rauschmayer’s extensive summary.

For more information about the changes involved, you can view the original pull request.

Differences with Experimental Legacy Decorators

If you’ve been using TypeScript for a while, you might be aware of the fact that it’s had support for “experimental” decorators for years.

While these experimental decorators have been incredibly useful, they modeled a much older version of the decorators proposal, and always required an opt-in compiler flag called --experimentalDecorators.

Any attempt to use decorators in TypeScript without this flag used to prompt an error message.

--experimentalDecorators will continue to exist for the foreseeable future;

however, without the flag, decorators will now be valid syntax for all new code.

Outside of --experimentalDecorators, they will be type-checked and emitted differently.

The type-checking rules and emit are sufficiently different that while decorators can be written to support both the old and new decorators behavior, any existing decorator functions are not likely to do so.

This new decorators proposal is not compatible with --emitDecoratorMetadata, and it does not allow decorating parameters.

Future ECMAScript proposals may be able to help bridge that gap.

On a final note: in addition to allowing decorators to be placed before the export keyword, the proposal for decorators now provides the option of placing decorators after export or export default.

The only exception is that mixing the two styles is not allowed.

js

Writing Well-Typed Decorators

The loggedMethod and bound decorator examples above are intentionally simple and omit lots of details about types.

Typing decorators can be fairly complex.

For example, a well-typed version of loggedMethod from above might look something like this:

ts

We had to separately model out the type of this, the parameters, and the return type of the original method, using the type parameters This, Args, and Return.
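A sketch of what such a typed version could look like, using the three type parameters named above. The Calculator class is a hypothetical example, and the log wording is an assumption.

```ts
function loggedMethod<This, Args extends any[], Return>(
    target: (this: This, ...args: Args) => Return,
    context: ClassMethodDecoratorContext<This, (this: This, ...args: Args) => Return>
) {
    const methodName = String(context.name);

    // The replacement has the same `this`, parameter, and return types as the target.
    function replacementMethod(this: This, ...args: Args): Return {
        console.log(`LOG: Entering method '${methodName}'.`);
        const result = target.call(this, ...args);
        console.log(`LOG: Exiting method '${methodName}'.`);
        return result;
    }
    return replacementMethod;
}

class Calculator {
    @loggedMethod
    add(a: number, b: number): number {
        return a + b;
    }
}

// The decorated method keeps its original signature for callers.
const sum: number = new Calculator().add(2, 3);
```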

Exactly how complex your decorator function definitions are depends on what you want to guarantee. Just keep in mind, your decorators will be used more than they’re written, so a well-typed version will usually be preferable - but there’s clearly a trade-off with readability, so try to keep things simple.

More documentation on writing decorators will be available in the future - but this post should have a good amount of detail for the mechanics of decorators.

const Type Parameters

When inferring the type of an object, TypeScript will usually choose a type that’s meant to be general.

For example, in this case, the inferred type of names is string[]:

ts

Usually the intent of this is to enable mutation down the line.

However, depending on what exactly getNamesExactly does and how it’s intended to be used, it can often be the case that a more-specific type is desired.

Up until now, API authors have typically had to recommend adding as const in certain places to achieve the desired inference:

ts

This can be cumbersome and easy to forget.

In TypeScript 5.0, you can now add a const modifier to a type parameter declaration to cause const-like inference to be the default:

ts
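A small sketch of the modifier in use. The HasNames type and the sample names are assumptions; getNamesExactly is the function name used in the surrounding text.

```ts
type HasNames = { names: readonly string[] };

// The const modifier asks for as-const-like inference of T at each call.
function getNamesExactly<const T extends HasNames>(arg: T): T["names"] {
    return arg.names;
}

// No `as const` is needed at the call site.
const names = getNamesExactly({ names: ["Alice", "Bob", "Eve"] });

// This annotation only type-checks because the first element is inferred as "Alice".
const first: "Alice" = names[0];
```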

Note that the const modifier doesn’t reject mutable values, and doesn’t require immutable constraints.

Using a mutable type constraint might give surprising results.

For example:

ts

Here, the inferred candidate for T is readonly ["a", "b", "c"], and a readonly array can’t be used where a mutable one is needed.

In this case, inference falls back to the constraint, the array is treated as string[], and the call still proceeds successfully.

A better definition of this function should use readonly string[]:

ts
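A sketch contrasting the two constraints. The names fnBad and fnGood are hypothetical, and the comments describe the TypeScript 5.0 behavior explained above.

```ts
// Mutable constraint: in TypeScript 5.0 the readonly candidate does not fit,
// so inference falls back to the constraint.
function fnBad<const T extends string[]>(args: T): T {
    return args;
}

// Readonly constraint: the const-like inference is kept.
function fnGood<const T extends readonly string[]>(args: T): T {
    return args;
}

const widened: string[] = fnBad(["a", "b", "c"]);
const exact: readonly ["a", "b", "c"] = fnGood(["a", "b", "c"]);
```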

Similarly, remember to keep in mind that the const modifier only affects inference of object, array and primitive expressions that were written within the call, so arguments which wouldn’t (or couldn’t) be modified with as const won’t see any change in behavior:

ts

See the pull request and the (first and second) motivating issues for more details.

Supporting Multiple Configuration Files in extends

When managing multiple projects, it can be helpful to have a “base” configuration file that other tsconfig.json files can extend from.

That’s why TypeScript supports an extends field for copying over fields from compilerOptions.

jsonc

However, there are scenarios where you might want to extend from multiple configuration files.

For example, imagine using a TypeScript base configuration file shipped to npm.

If you want all your projects to also use the options from the @tsconfig/strictest package on npm, then there’s a simple solution: have tsconfig.base.json extend from @tsconfig/strictest:

jsonc

This works to a point.

If you have any projects that don’t want to use @tsconfig/strictest, they have to either manually disable the options, or create a separate version of tsconfig.base.json that doesn’t extend from @tsconfig/strictest.

To give some more flexibility here, TypeScript 5.0 now allows the extends field to take multiple entries.

For example, in this configuration file:

jsonc

Writing this is kind of like extending c directly, where c extends b, and b extends a.

If any fields “conflict”, the latter entry wins.

So in the following example, both strictNullChecks and noImplicitAny are enabled in the final tsconfig.json.

jsonc

As another example, we can rewrite our original example in the following way.

jsonc

For more details, read more on the original pull request.

All enums Are Union enums

When TypeScript originally introduced enums, they were nothing more than a set of numeric constants with the same type.

ts

The only thing special about E.Foo and E.Bar was that they were assignable to anything expecting the type E.

Other than that, they were pretty much just numbers.

ts

It wasn’t until TypeScript 2.0 introduced enum literal types that enums got a bit more special. Enum literal types gave each enum member its own type, and turned the enum itself into a union of each member type. They also allowed us to refer to only a subset of the types of an enum, and to narrow away those types.

ts

One issue with giving each enum member its own type was that those types were in some part associated with the actual value of the member. In some cases it’s not possible to compute that value - for instance, an enum member could be initialized by a function call.

ts

Whenever TypeScript ran into these issues, it would quietly back out and use the old enum strategy. That meant giving up all the advantages of unions and literal types.

TypeScript 5.0 manages to make all enums into union enums by creating a unique type for each computed member. That means that all enums can now be narrowed and have their members referenced as types as well.
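A minimal sketch of what this enables, assuming TypeScript 5.0 or later. The Setting enum and the computeSeed helper are hypothetical.

```ts
// Hypothetical helper standing in for any value that cannot be computed statically.
function computeSeed(): number {
    return 42;
}

enum Setting {
    Default = 0,
    Seed = computeSeed(), // computed member
}

// Setting.Seed can be referenced as a type even though its value is computed.
function isSeed(value: Setting): value is Setting.Seed {
    return value === Setting.Seed;
}
```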

For more details on this change, you can read the specifics on GitHub.

--moduleResolution bundler

TypeScript 4.7 introduced the node16 and nodenext options for its --module and --moduleResolution settings.

The intent of these options was to better model the precise lookup rules for ECMAScript modules in Node.js;

however, this mode has many restrictions that other tools don’t really enforce.

For example, in an ECMAScript module in Node.js, any relative import needs to include a file extension.

js

There are certain reasons for this in Node.js and the browser - it makes file lookups faster and works better for naive file servers.

But for many developers using tools like bundlers, the node16/nodenext settings were cumbersome because bundlers don’t have most of these restrictions.

In some ways, the node resolution mode was better for anyone using a bundler.

But in some ways, the original node resolution mode was already out of date.

Most modern bundlers use a fusion of the ECMAScript module and CommonJS lookup rules in Node.js.

For example, extensionless imports work just fine just like in CommonJS, but when looking through the export conditions of a package, they’ll prefer an import condition just like in an ECMAScript file.

To model how bundlers work, TypeScript now introduces a new strategy: --moduleResolution bundler.

jsonc

If you are using a modern bundler like Vite, esbuild, swc, Webpack, Parcel, and others that implement a hybrid lookup strategy, the new bundler option should be a good fit for you.

On the other hand, if you’re writing a library that’s meant to be published on npm, using the bundler option can hide compatibility issues that may arise for your users who aren’t using a bundler.

So in these cases, using the node16 or nodenext resolution options is likely to be a better path.

To read more on --moduleResolution bundler, take a look at the implementing pull request.

Resolution Customization Flags

JavaScript tooling may now model “hybrid” resolution rules, like in the bundler mode we described above.

Because tools may differ in their support slightly, TypeScript 5.0 provides ways to enable or disable a few features that may or may not work with your configuration.

allowImportingTsExtensions

--allowImportingTsExtensions allows TypeScript files to import each other with a TypeScript-specific extension like .ts, .mts, or .tsx.

This flag is only allowed when --noEmit or --emitDeclarationOnly is enabled, since these import paths would not be resolvable at runtime in JavaScript output files.

The expectation here is that your resolver (e.g. your bundler, a runtime, or some other tool) is going to make these imports between .ts files work.

resolvePackageJsonExports

--resolvePackageJsonExports forces TypeScript to consult the exports field of package.json files if it ever reads from a package in node_modules.

This option defaults to true under the node16, nodenext, and bundler options for --moduleResolution.

resolvePackageJsonImports

--resolvePackageJsonImports forces TypeScript to consult the imports field of package.json files when performing a lookup that starts with # from a file whose ancestor directory contains a package.json.

This option defaults to true under the node16, nodenext, and bundler options for --moduleResolution.

allowArbitraryExtensions

In TypeScript 5.0, when an import path ends in an extension that isn’t a known JavaScript or TypeScript file extension, the compiler will look for a declaration file for that path in the form of {file basename}.d.{extension}.ts.

For example, if you are using a CSS loader in a bundler project, you might want to write (or generate) declaration files for those stylesheets:

css

ts

ts

By default, this import will raise an error to let you know that TypeScript doesn’t understand this file type and your runtime might not support importing it.

But if you’ve configured your runtime or bundler to handle it, you can suppress the error with the new --allowArbitraryExtensions compiler option.

Note that historically, a similar effect has often been achievable by adding a declaration file named app.css.d.ts instead of app.d.css.ts - however, this just worked through Node’s require resolution rules for CommonJS.

Strictly speaking, the former is interpreted as a declaration file for a JavaScript file named app.css.js.

Because relative file imports need to include extensions in Node’s ESM support, TypeScript would error on our example in an ESM file under --moduleResolution node16 or nodenext.

For more information, read up on the proposal for this feature and its corresponding pull request.

customConditions

--customConditions takes a list of additional conditions that should succeed when TypeScript resolves from an exports or imports field of a package.json.

These conditions are added to whatever existing conditions a resolver will use by default.

For example, when this field is set in a tsconfig.json as so:

jsonc

Any time an exports or imports field is referenced in package.json, TypeScript will consider conditions called my-condition.

So when importing from a package with the following package.json

jsonc

TypeScript will try to look for files corresponding to foo.mjs.

This field is only valid under the node16, nodenext, and bundler options for --moduleResolution.

--verbatimModuleSyntax

By default, TypeScript does something called import elision. Basically, if you write something like

ts

TypeScript detects that you’re only using an import for types and drops the import entirely. Your output JavaScript might look something like this:

js

Most of the time this is good, because if Car isn’t a value that’s exported from ./car, we’ll get a runtime error.

But it does add a layer of complexity for certain edge cases.

For example, notice there’s no statement like import "./car"; - the import was dropped entirely.

That actually makes a difference depending on whether or not the module has side-effects.

TypeScript’s emit strategy for JavaScript also has another few layers of complexity - import elision isn’t always just driven by how an import is used - it often consults how a value is declared as well. So it’s not always clear whether code like the following

ts

should be preserved or dropped.

If Car is declared with something like a class, then it can be preserved in the resulting JavaScript file.

But if Car is only declared as a type alias or interface, then the JavaScript file shouldn’t export Car at all.

While TypeScript might be able to make these emit decisions based on information from across files, not every compiler can.

The type modifier on imports and exports helps with these situations a bit.

We can make it explicit whether an import or export is only being used for type analysis, and can be dropped entirely in JavaScript files by using the type modifier.

ts

type modifiers are not quite useful on their own - by default, module elision will still drop imports, and nothing forces you to make the distinction between type and plain imports and exports.

So TypeScript has the flag --importsNotUsedAsValues to make sure you use the type modifier, --preserveValueImports to prevent some module elision behavior, and --isolatedModules to make sure that your TypeScript code works across different compilers.

Unfortunately, understanding the fine details of those 3 flags is hard, and there are still some edge cases with unexpected behavior.

TypeScript 5.0 introduces a new option called --verbatimModuleSyntax to simplify the situation.

The rules are much simpler - any imports or exports without a type modifier are left around.

Anything that uses the type modifier is dropped entirely.

ts

With this new option, what you see is what you get.

That does have some implications when it comes to module interop though.

Under this flag, ECMAScript imports and exports won’t be rewritten to require calls when your settings or file extension implied a different module system.

Instead, you’ll get an error.

If you need to emit code that uses require and module.exports, you’ll have to use TypeScript’s module syntax that predates ES2015:

| Input TypeScript | Output JavaScript |

|---|---|

|

|

|

|

While this is a limitation, it does help make some issues more obvious.

For example, it’s very common to forget to set the type field in package.json under --module node16.

As a result, developers would start writing CommonJS modules instead of ES modules without realizing it, giving surprising lookup rules and JavaScript output.

This new flag ensures that you’re intentional about the file type you’re using because the syntax is intentionally different.

Because --verbatimModuleSyntax provides a more consistent story than --importsNotUsedAsValues and --preserveValueImports, those two existing flags are being deprecated in its favor.

For more details, read up on the original pull request (https://github.com/microsoft/TypeScript/pull/52203) and its proposal issue.

Support for export type *

When TypeScript 3.8 introduced type-only imports, the new syntax wasn’t allowed on export * from "module" or export * as ns from "module" re-exports. TypeScript 5.0 adds support for both of these forms:

ts

You can read more about the implementation here.

@satisfies Support in JSDoc

TypeScript 4.9 introduced the satisfies operator.

It made sure that the type of an expression was compatible, without affecting the type itself.

For example, let’s take the following code:

ts

Here, TypeScript knows that myConfigSettings.extends was declared with an array - because while satisfies validated the type of our object, it didn’t bluntly change it to CompilerOptions and lose information.

So if we want to map over extends, that’s fine.

ts
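A sketch of the operator in TypeScript itself. The two interfaces are trimmed-down assumptions, and the mapping callback is a placeholder for whatever work is done per entry.

```ts
interface CompilerOptions {
    strict?: boolean;
    outDir?: string;
}

interface ConfigSettings {
    compilerOptions?: CompilerOptions;
    extends?: string | string[];
}

// Checked against ConfigSettings, but the inferred type of the object is kept.
const myConfigSettings = {
    compilerOptions: { strict: true, outDir: "../lib" },
    extends: ["@tsconfig/strictest", "../../../tsconfig.base.json"],
} satisfies ConfigSettings;

// `extends` is still known to be an array, so `map` needs no narrowing first.
const inheritedConfigs = myConfigSettings.extends.map((path) => path.toUpperCase());
```

With a plain type annotation of ConfigSettings instead, extends would have the type string | string[] | undefined and the call to map would not type-check without a guard.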

This was helpful for TypeScript users, but plenty of people use TypeScript to type-check their JavaScript code using JSDoc annotations.

That’s why TypeScript 5.0 is supporting a new JSDoc tag called @satisfies that does exactly the same thing.

/** @satisfies */ can catch type mismatches:

js

But it will preserve the original type of our expressions, allowing us to use our values more precisely later on in our code.

js

/** @satisfies */ can also be used inline on any parenthesized expression.

We could have written myCompilerOptions like this:

ts

Why? Well, it usually makes more sense when you’re deeper in some other code, like a function call.

js

This feature was provided thanks to Oleksandr Tarasiuk!

@overload Support in JSDoc

In TypeScript, you can specify overloads for a function. Overloads give us a way to say that a function can be called with different arguments, and possibly return different results. They can restrict how callers can actually use our functions, and refine what results they’ll get back.

ts

Here, we’ve said that printValue takes either a string or a number as its first argument.

If it takes a number, it can take a second argument to determine how many fractional digits we can print.
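A sketch of overloads with this shape in TypeScript. As an assumption made so the result is easy to check, this version returns the formatted text instead of printing it, and it fixes the locale to "en-US".

```ts
// Overload signatures: the ways callers are allowed to use the function.
function printValue(str: string): string;
function printValue(num: number, maxFractionDigits?: number): string;
// Implementation signature: covers both overloads, but is not itself callable.
function printValue(value: string | number, maximumFractionDigits?: number): string {
    if (typeof value === "number") {
        const formatter = new Intl.NumberFormat("en-US", { maximumFractionDigits });
        return formatter.format(value);
    }
    return value;
}
```

A call such as printValue("hello", 2) is rejected at compile time, because only the number overload accepts a second argument.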

TypeScript 5.0 now allows JSDoc to declare overloads with a new @overload tag.

Each JSDoc comment with an @overload tag is treated as a distinct overload for the following function declaration.

js

Now regardless of whether we’re writing in a TypeScript or JavaScript file, TypeScript can let us know if we’ve called our functions incorrectly.

ts

This new tag was implemented thanks to Tomasz Lenarcik.

Passing Emit-Specific Flags Under --build

TypeScript now allows the following flags to be passed under --build mode:

- --declaration

- --emitDeclarationOnly

- --declarationMap

- --sourceMap

- --inlineSourceMap

This makes it way easier to customize certain parts of a build where you might have different development and production builds.

For example, a development build of a library might not need to produce declaration files, but a production build would. A project can configure declaration emit to be off by default and simply be built with

sh

Once you’re done iterating in the inner loop, a “production” build can just pass the --declaration flag.

sh

More information on this change is available here.

Case-Insensitive Import Sorting in Editors

In editors like Visual Studio and VS Code, TypeScript powers the experience for organizing and sorting imports and exports. Often though, there can be different interpretations of when a list is “sorted”.

For example, is the following import list sorted?

ts

The answer might surprisingly be “it depends”.

If we don’t care about case-sensitivity, then this list is clearly not sorted.

The letter f comes before both t and T.

But in most programming languages, sorting defaults to comparing the byte values of strings.

The way JavaScript compares strings means that "Toggle" always comes before "freeze" because according to the ASCII character encoding, uppercase letters come before lowercase.

So from that perspective, the import list is sorted.
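The two interpretations can be compared directly with a small sketch. The list reuses the two names mentioned above, and "toBoolean" is an assumed third entry added for illustration.

```ts
// "toBoolean" is a hypothetical name; the other two come from the discussion above.
const names = ["Toggle", "freeze", "toBoolean"];

// Default sort compares UTF-16 code units, so uppercase "T" sorts before lowercase "f".
const ordinalOrder = names.slice().sort();

// Case-insensitive sort: compare lowercased copies instead.
const caseInsensitiveOrder = names.slice().sort((a, b) => {
    const left = a.toLowerCase();
    const right = b.toLowerCase();
    return left < right ? -1 : left > right ? 1 : 0;
});

console.log(ordinalOrder);         // [ 'Toggle', 'freeze', 'toBoolean' ]
console.log(caseInsensitiveOrder); // [ 'freeze', 'toBoolean', 'Toggle' ]
```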

TypeScript previously considered the import list to be sorted because it was doing a basic case-sensitive sort. This could be a point of frustration for developers who preferred a case-insensitive ordering, or who used tools like ESLint which require case-insensitive ordering by default.

TypeScript now detects case sensitivity by default. This means that TypeScript and tools like ESLint typically won’t “fight” each other over how to best sort imports.

Our team has also been experimenting with further sorting strategies which you can read about here.

These options may eventually be configurable by editors.

For now, they are still unstable and experimental, and you can opt into them in VS Code today by using the typescript.unstable entry in your JSON options.

Below are all of the options you can try out (set to their defaults):

jsonc

You can read more details on the original work for auto-detecting and specifying case-insensitivity, followed by the broader set of options.

Exhaustive switch/case Completions

When writing a switch statement, TypeScript now detects when the value being checked has a literal type.

If so, it will offer a completion that scaffolds out each uncovered case.

You can see specifics of the implementation on GitHub.

Speed, Memory, and Package Size Optimizations

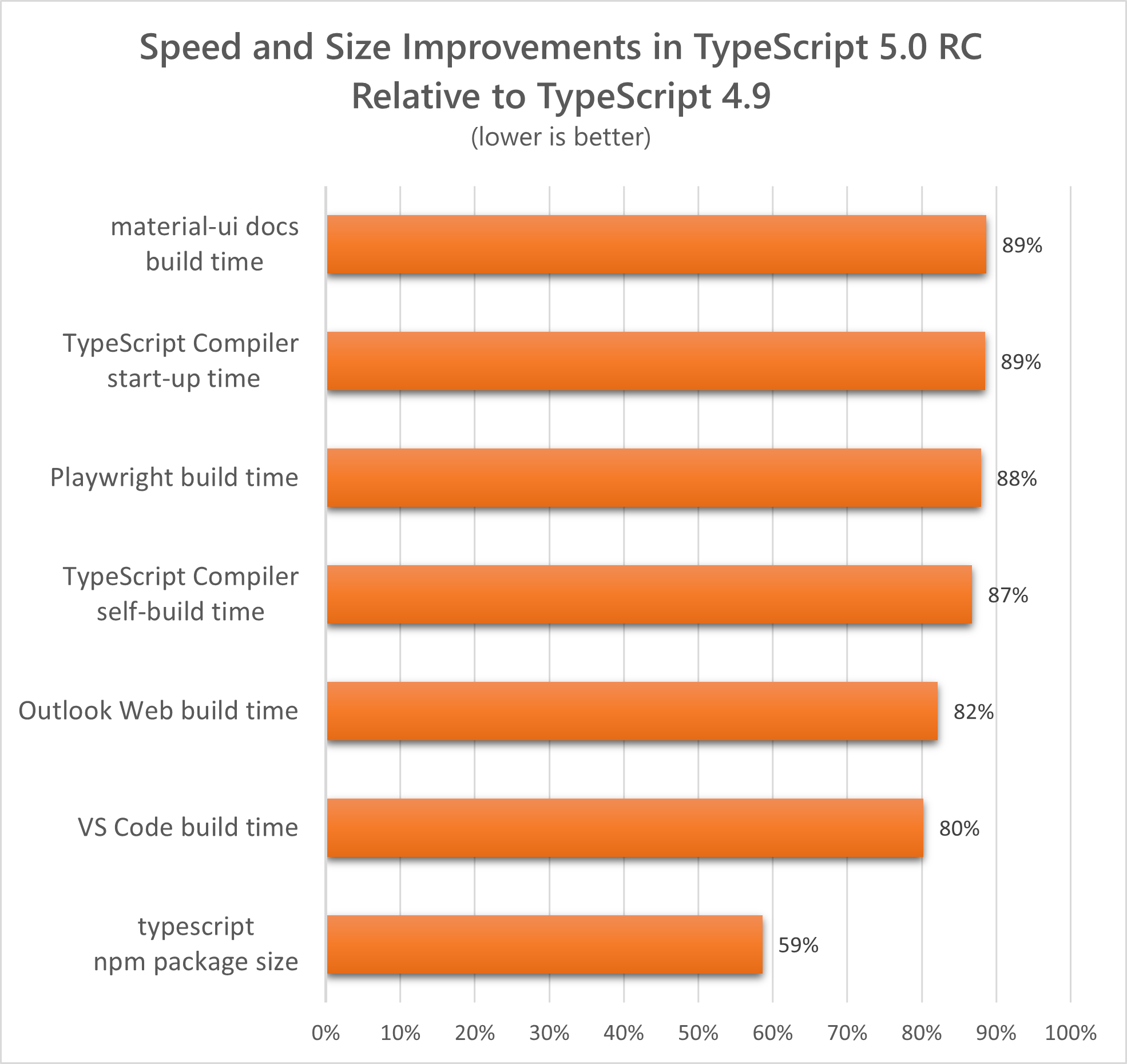

TypeScript 5.0 contains lots of powerful changes across our code structure, our data structures, and algorithmic implementations. What these all mean is that your entire experience should be faster - not just running TypeScript, but even installing it.

Here are a few interesting wins in speed and size that we’ve been able to capture relative to TypeScript 4.9.

| Scenario | Time or Size Relative to TS 4.9 |

|---|---|

| material-ui build time | 89% |

| TypeScript Compiler startup time | 89% |

| Playwright build time | 88% |

| TypeScript Compiler self-build time | 87% |

| Outlook Web build time | 82% |

| VS Code build time | 80% |

| typescript npm Package Size | 59% |

How? There are a few notable improvements we’d like to give more details on in the future. But we won’t make you wait for that blog post.

First off, we recently migrated TypeScript from namespaces to modules, allowing us to leverage modern build tooling that can perform optimizations like scope hoisting. Using this tooling, revisiting our packaging strategy, and removing some deprecated code has shaved off about 26.4 MB from TypeScript 4.9’s 63.8 MB package size. It also brought us a notable speed-up through direct function calls.

TypeScript also added more uniformity to internal object types within the compiler, and slimmed the data stored on some of these object types. This reduced polymorphic and megamorphic use sites, while offsetting most of the extra memory consumption that was necessary for uniform shapes.

We’ve also performed some caching when serializing information to strings. Type display, which can happen as part of error reporting, declaration emit, code completions, and more, can end up being fairly expensive. TypeScript now caches some commonly used machinery to reuse across these operations.

Another notable change we made that improved our parser was leveraging var to occasionally side-step the cost of using let and const across closures.

This improved some of our parsing performance.

Overall, we expect most codebases should see speed improvements from TypeScript 5.0, and have consistently been able to reproduce wins between 10% and 20%. Of course this will depend on hardware and codebase characteristics, but we encourage you to try it out on your codebase today!

For more information, see some of our notable optimizations:

- Migrate to Modules

- Node Monomorphization

- Symbol Monomorphization

- Identifier Size Reduction

- Printer Caching

- Limited Usage of var

Breaking Changes and Deprecations

Runtime Requirements

TypeScript now targets ECMAScript 2018. For Node users, that means a minimum version requirement of Node.js 10.

lib.d.ts Changes

Changes to how types for the DOM are generated might have an impact on existing code.

Notably, certain properties have been converted from number to numeric literal types, and properties and methods for cut, copy, and paste event handling have been moved across interfaces.

API Breaking Changes

In TypeScript 5.0, we moved to modules, removed some unnecessary interfaces, and made some correctness improvements. For more details on what’s changed, see our API Breaking Changes page.

Forbidden Implicit Coercions in Relational Operators

Certain operations in TypeScript will already warn you if you write code which may cause an implicit string-to-number coercion:

ts

In 5.0, this will also be applied to the relational operators >, <, <=, and >=:

ts

To allow this if desired, you can explicitly coerce the operand to a number using +:

ts
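A minimal sketch of the explicit coercion. The function name is hypothetical.

```ts
function isLargerThanFour(value: number | string): boolean {
    // Unary plus makes the string-to-number conversion explicit,
    // so the comparison is between two numbers.
    return +value > 4;
}
```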

This correctness improvement was contributed courtesy of Mateusz Burzyński.

Enum Overhaul

TypeScript has had some long-standing oddities around enums ever since its first release.

In 5.0, we’re cleaning up some of these problems, as well as reducing the concept count needed to understand the various kinds of enums you can declare.

There are two main new errors you might see as part of this.

The first is that assigning an out-of-domain literal to an enum type will now error as one might expect:

ts

The other is that declaration of certain kinds of indirected mixed string/number enum forms would, incorrectly, create an all-number enum:

ts

You can see more details in the relevant change.

More Accurate Type-Checking for Parameter Decorators in Constructors Under --experimentalDecorators

TypeScript 5.0 makes type-checking more accurate for decorators under --experimentalDecorators.

One place where this becomes apparent is when using a decorator on a constructor parameter.

ts

This call will fail because key expects a string | symbol, but constructor parameters receive a key of undefined.

The correct fix is to change the type of key within inject.

A reasonable workaround if you’re using a library that can’t be upgraded is to wrap inject in a more type-safe decorator function, and use a type-assertion on key.

For more details, see this issue.

Deprecations and Default Changes

In TypeScript 5.0, we’ve deprecated the following settings and setting values:

- --target: ES3

- --out

- --noImplicitUseStrict

- --keyofStringsOnly

- --suppressExcessPropertyErrors

- --suppressImplicitAnyIndexErrors

- --noStrictGenericChecks

- --charset

- --importsNotUsedAsValues

- --preserveValueImports

- prepend in project references

These configurations will continue to be allowed until TypeScript 5.5, at which point they will be removed entirely. Until then, you will receive a warning if you are using these settings.

In TypeScript 5.0, as well as future releases 5.1, 5.2, 5.3, and 5.4, you can specify "ignoreDeprecations": "5.0" to silence those warnings.

We’ll also shortly be releasing a 4.9 patch to allow specifying ignoreDeprecations to allow for smoother upgrades.

Aside from deprecations, we’ve changed some settings to better improve cross-platform behavior in TypeScript.

--newLine, which controls the line endings emitted in JavaScript files, used to be inferred based on the current operating system if not specified.

We think builds should be as deterministic as possible, and Windows Notepad supports line-feed line endings now, so the new default setting is LF.

The old OS-specific inference behavior is no longer available.

--forceConsistentCasingInFileNames, which ensured that all references to the same file name in a project agreed in casing, now defaults to true.

This can help catch issues with code written on case-insensitive file systems.

You can leave feedback and view more information on the tracking issue for 5.0 deprecations.