👋 Welcome to MLC LLM

Machine Learning Compilation for Large Language Models (MLC LLM) is a high-performance, universal deployment solution that lets any large language model be deployed natively, with native APIs and compiler acceleration. The mission of this project is to enable everyone to develop, optimize, and deploy AI models natively on everyone’s devices with ML compilation techniques.

Getting Started

To get started, try out MLC LLM's support for int4-quantized Llama2-7B. At least 6GB of free VRAM is recommended to run it.
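As a rough sanity check on the 6GB recommendation, the sketch below estimates the memory taken by the quantized weights plus the KV cache. Every number in it is an assumption, not a figure published by MLC LLM: a nominal 7 billion parameters, 4-bit weights with one fp16 scale per group of 32 weights (about 4.5 bits per weight, our reading of the `q4f16_1` label), and an fp16 KV cache for Llama2-7B's 32 layers, hidden size 4096, and a 4096-token context. Activations and runtime buffers are ignored.

```python
def weight_bytes(num_params: float, bits_per_weight: float) -> float:
    """Bytes needed to store the quantized weights."""
    return num_params * bits_per_weight / 8


def kv_cache_bytes(num_layers: int, hidden_size: int, context_len: int,
                   bytes_per_elem: int = 2) -> int:
    """Bytes for the key and value caches (hence the factor 2) at full context."""
    return 2 * num_layers * hidden_size * context_len * bytes_per_elem


# Assumption: 4-bit weights plus one fp16 (16-bit) scale shared by 32 weights
BITS_PER_WEIGHT = 4 + 16 / 32  # 4.5 bits

# Assumption: nominal 7e9 parameters and Llama2-7B's layer/hidden/context sizes
weights = weight_bytes(7e9, BITS_PER_WEIGHT)
kv = kv_cache_bytes(num_layers=32, hidden_size=4096, context_len=4096)

print(f"weights  ~ {weights / 1e9:.2f} GB")
print(f"KV cache ~ {kv / 1e9:.2f} GB")
print(f"total    ~ {(weights + kv) / 1e9:.2f} GB")
```

Under these assumptions the weights take about 3.9 GB and a full-context KV cache about 2.1 GB, which is consistent with the recommended 6GB of free VRAM.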

Install MLC Chat Python. MLC LLM is available via pip. We recommend always installing it in an isolated conda virtual environment.
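A minimal sketch for checking that you are inside an activated, non-base conda environment and that the `mlc_chat` package is importable there. It relies on conda setting the `CONDA_DEFAULT_ENV` variable on activation; the helper names are hypothetical and not part of MLC LLM.

```python
import importlib.util
import os
from typing import Optional


def active_conda_env() -> Optional[str]:
    """Name (or path) of the activated conda environment, or None outside conda."""
    # `conda activate` sets CONDA_DEFAULT_ENV for the active environment
    return os.environ.get("CONDA_DEFAULT_ENV")


def is_isolated_env() -> bool:
    """True inside a conda environment other than the shared `base` one."""
    env = active_conda_env()
    return env is not None and env != "base"


def mlc_chat_available() -> bool:
    """True if `mlc_chat` can be imported by this interpreter."""
    return importlib.util.find_spec("mlc_chat") is not None


if __name__ == "__main__":
    print("conda env:", active_conda_env())
    print("isolated env:", is_isolated_env())
    print("mlc_chat importable:", mlc_chat_available())
```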

Download pre-quantized weights. The commands below download the int4-quantized Llama2-7B from HuggingFace:

git lfs install && mkdir -p dist/prebuilt

git clone https://huggingface.co/mlc-ai/mlc-chat-Llama-2-7b-chat-hf-q4f16_1 \
    dist/prebuilt/mlc-chat-Llama-2-7b-chat-hf-q4f16_1
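If Git LFS is not set up, `git clone` leaves small LFS pointer files in place of the large weight files. The hypothetical helper below scans a cloned directory for such pointers. It relies only on the standard Git LFS pointer header and makes no assumption about the file names inside the MLC weight repository.

```python
from pathlib import Path
from typing import List

# Every Git LFS pointer file starts with this header line
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(model_dir: str) -> List[str]:
    """Return files that are still LFS pointer stubs instead of real content."""
    stubs = []
    for path in sorted(Path(model_dir).rglob("*")):
        # Skip git's own metadata and directories
        if ".git" in path.parts or not path.is_file():
            continue
        with path.open("rb") as f:
            head = f.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            stubs.append(str(path))
    return stubs


if __name__ == "__main__":
    print(find_lfs_pointers("dist/prebuilt/mlc-chat-Llama-2-7b-chat-hf-q4f16_1"))
```

After a successful download this should print an empty list; if it does not, running `git lfs pull` inside that directory fetches the actual files.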

Download pre-compiled model library. The pre-compiled model libraries can be cloned as follows:

git clone https://github.com/mlc-ai/binary-mlc-llm-libs.git dist/prebuilt/lib
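A small sketch for listing which of the cloned pre-compiled libraries belong to a given model. It assumes, without confirmation from this page, that library files sit directly in `dist/prebuilt/lib` and are named `<model>-<backend>.<ext>`; `list_model_libs` is a hypothetical helper.

```python
from pathlib import Path
from typing import List


def list_model_libs(lib_dir: str, model_id: str) -> List[str]:
    """List library files in lib_dir whose names start with '<model_id>-'."""
    # Assumption: libraries are named "<model_id>-<backend>.<ext>"
    return sorted(p.name for p in Path(lib_dir).glob(f"{model_id}-*") if p.is_file())


if __name__ == "__main__":
    for name in list_model_libs("dist/prebuilt/lib", "Llama-2-7b-chat-hf-q4f16_1"):
        print(name)
```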

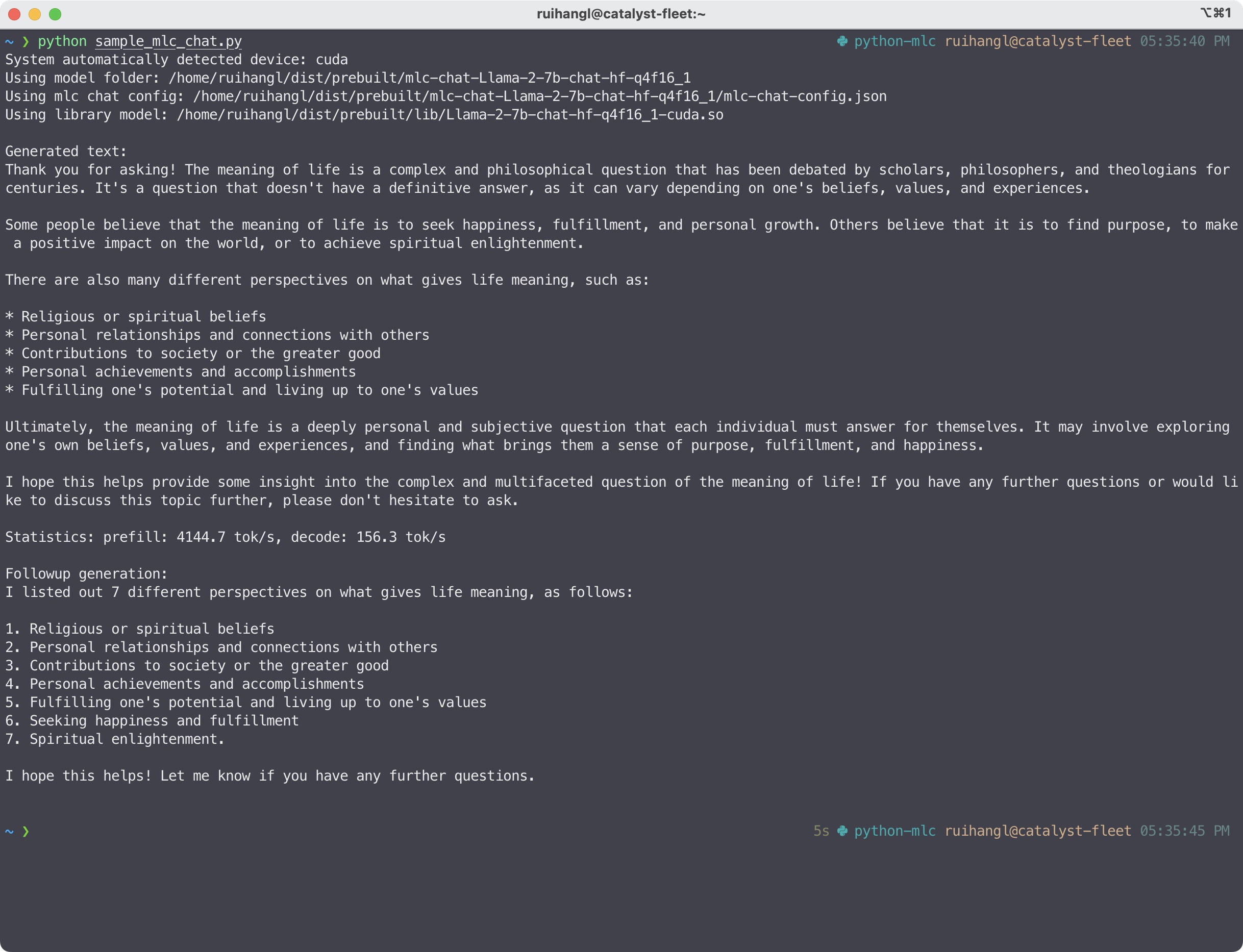

Run in Python. The following Python script showcases the Python API of MLC LLM and its streaming capability:

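from mlc_chat import ChatModule
from mlc_chat.callback import StreamToStdout

# Load the int4-quantized Llama2-7B weights and model library downloaded above
cm = ChatModule(model="Llama-2-7b-chat-hf-q4f16_1")

# Generate a response, streaming the text to stdout as it is produced
cm.generate(prompt="What is the meaning of life?", progress_callback=StreamToStdout(callback_interval=2))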
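The streaming idea can be illustrated without MLC LLM itself. The sketch below is a hypothetical callback, not the actual implementation of `StreamToStdout`: it assumes the generator calls it every `interval` decoding steps with the full text generated so far (our reading of `callback_interval`) and forwards only the newly added text. `fake_generate` is a stand-in for a real model.

```python
from typing import Callable, List


class DeltaStreamer:
    """Hypothetical streaming callback that forwards only newly generated text."""

    def __init__(self, emit: Callable[[str], None]) -> None:
        self.emit = emit
        self.seen = ""  # text already forwarded

    def __call__(self, message: str = "", stopped: bool = False) -> None:
        # Assumes the message only grows, so the delta is its unseen suffix
        delta = message[len(self.seen):]
        if delta:
            self.emit(delta)
        self.seen = message
        if stopped:
            self.emit("\n")  # finish the line once generation ends


def fake_generate(tokens: List[str], callback: DeltaStreamer, interval: int = 2) -> str:
    """Stand-in for a model: appends one token per step, calling back every `interval` steps."""
    text = ""
    for step, token in enumerate(tokens, start=1):
        text += token
        if step % interval == 0:
            callback(message=text)
    callback(message=text, stopped=True)
    return text


chunks: List[str] = []
fake_generate(["The", " meaning", " of", " life"], DeltaStreamer(chunks.append), interval=2)
print(chunks)  # ['The meaning', ' of life', '\n']
```

Sending deltas rather than the full text keeps each update small, and a larger interval trades output latency for fewer callback invocations.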

Colab walkthrough. A Jupyter notebook on Colab provides a detailed walkthrough of the Python API.

Documentation and tutorial. The Python API reference and tutorials are available online.

MLC LLM Python API

Install MLC Chat CLI. MLC Chat CLI is available via conda using the commands below. We recommend always installing it in an isolated conda virtual environment. Windows/Linux users should make sure the latest Vulkan driver is installed.

conda create -n mlc-chat-venv -c mlc-ai -c conda-forge mlc-chat-cli-nightly

conda activate mlc-chat-venv

Download pre-quantized weights. The commands below download the int4-quantized Llama2-7B from HuggingFace:

git lfs install && mkdir -p dist/prebuilt

git clone https://huggingface.co/mlc-ai/mlc-chat-Llama-2-7b-chat-hf-q4f16_1 \
    dist/prebuilt/mlc-chat-Llama-2-7b-chat-hf-q4f16_1

Download pre-compiled model library. The pre-compiled model libraries can be cloned as follows:

git clone https://github.com/mlc-ai/binary-mlc-llm-libs.git dist/prebuilt/lib

Run in the command line.

mlc_chat_cli --model Llama-2-7b-chat-hf-q4f16_1
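For scripting, the same command can be launched from Python. This is a minimal sketch that uses only the `--model` flag shown above and assumes it is run from the directory containing `dist/prebuilt`, with `mlc-chat-venv` activated; `build_cli_command` and `run_cli` are hypothetical helpers.

```python
import shutil
import subprocess
from typing import List


def build_cli_command(model: str) -> List[str]:
    """Mirror the shell command: mlc_chat_cli --model <model>."""
    return ["mlc_chat_cli", "--model", model]


def run_cli(model: str = "Llama-2-7b-chat-hf-q4f16_1") -> int:
    """Start the interactive CLI and return its exit code."""
    if shutil.which("mlc_chat_cli") is None:
        raise FileNotFoundError("mlc_chat_cli not found on PATH; activate mlc-chat-venv first")
    # The chat session is interactive, so the child inherits this terminal's stdin/stdout
    return subprocess.run(build_cli_command(model)).returncode


if __name__ == "__main__":
    raise SystemExit(run_cli())
```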

MLC LLM on CLI

Note

The MLC Chat CLI package is only built with Vulkan (Windows/Linux) and Metal (macOS). To use other GPU backends such as CUDA and ROCm, please use the prebuilt Python package or build from source.
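The note above amounts to a small lookup from operating system to the backend the prebuilt CLI supports. The sketch below encodes only what the note states; the helper names are hypothetical, and it relies on `platform.system()` returning `"Windows"`, `"Linux"`, or `"Darwin"` (macOS).

```python
import platform
from typing import Optional

# Backends the prebuilt MLC Chat CLI package is built with, per the note
CLI_BACKENDS = {"Windows": "vulkan", "Linux": "vulkan", "Darwin": "metal"}


def cli_backend(system: Optional[str] = None) -> Optional[str]:
    """GPU backend used by the prebuilt CLI on this OS, or None if not covered."""
    return CLI_BACKENDS.get(system or platform.system())


def needs_python_or_source(requested_backend: str, system: Optional[str] = None) -> bool:
    """True if the requested backend (e.g. "cuda", "rocm") is not in the prebuilt CLI."""
    return requested_backend.lower() != cli_backend(system)


if __name__ == "__main__":
    print("prebuilt CLI backend here:", cli_backend())
```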

WebLLM. MLC LLM generates performant code for WebGPU and WebAssembly, so that LLMs can be run locally in a web browser without server resources.

Download pre-quantized weights. WebLLM handles this step itself, so no manual download is needed.

Download pre-compiled model library. WebLLM automatically downloads the WebGPU code it executes.

Check browser compatibility. The latest version of Google Chrome provides a WebGPU runtime, and WebGPU Report is a useful tool for verifying the WebGPU capabilities of your browser.

MLC LLM on Web

Install MLC Chat iOS. It is available on the App Store.

Requirement. The Llama2-7B model needs an iOS device with at least 6GB of RAM, whereas the RedPajama-3B model runs with at least 4GB of RAM.
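The RAM requirements can be expressed as a simple lookup that lists which models a device can run. The thresholds come from the requirement above; the function name and the model keys are hypothetical labels, not identifiers used by the app.

```python
from typing import Dict, List

# Minimum device RAM in GB for each model, as stated in the requirement
MIN_RAM_GB: Dict[str, int] = {"Llama2-7B": 6, "RedPajama-3B": 4}


def models_that_fit(device_ram_gb: float) -> List[str]:
    """Models whose minimum RAM the device meets, largest requirement first."""
    by_need = sorted(MIN_RAM_GB.items(), key=lambda item: -item[1])
    return [model for model, need in by_need if device_ram_gb >= need]


if __name__ == "__main__":
    for ram in (8, 6, 4, 3):
        print(ram, "GB ->", models_that_fit(ram))
```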

Tutorial and source code. The iOS app is fully open source, and a tutorial is included in the documentation.

MLC Chat on iOS

Install MLC Chat Android. A prebuilt APK is available.

Requirement. The Llama2-7B model needs an Android device with at least 6GB of RAM, whereas the RedPajama-3B model runs with at least 4GB of RAM. The demo has been tested on:

Samsung S23 with Snapdragon 8 Gen 2 chip

Redmi Note 12 Pro with Snapdragon 685

Google Pixel phones

Tutorial and source code. The Android app is fully open source, and a tutorial is included in the documentation.

MLC LLM on Android