Eclipse Jetty Programming Guide

The Eclipse Jetty Programming Guide targets developers who want to use the Jetty libraries in their applications.

The Jetty libraries provide the client-side and server-side APIs to work with various web protocols such as HTTP/1.1, HTTP/2, HTTP/3, WebSocket and FastCGI.

You may use the Jetty client-side library in your application to make calls to third party REST services, or to other REST microservices in your system.

Likewise, you may use the Jetty server-side library to quickly create an HTTP or REST service without having to create a web application archive file (a *.war file) and without having to deploy it to a Jetty standalone server that you would have to download and install.

This guide will walk you through the design of the Jetty libraries and how to use its classes to write your applications.

Client Libraries

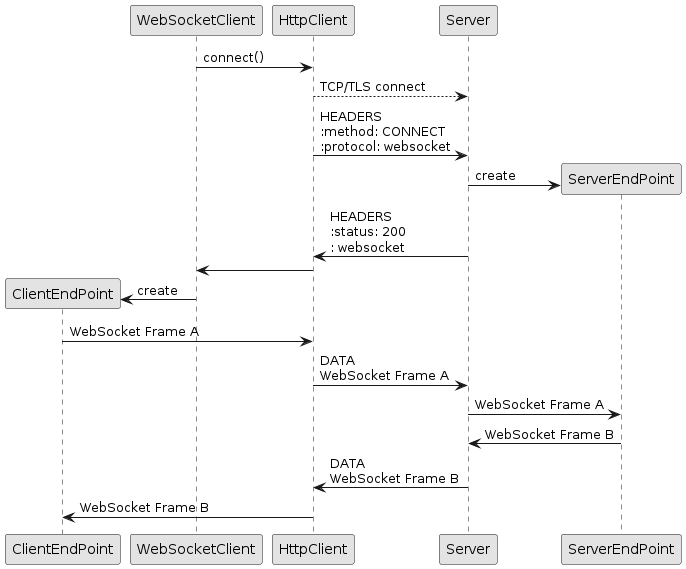

The Eclipse Jetty Project provides client-side libraries that allow you to embed an HTTP or WebSocket client in your applications. A typical example is a client application that needs to contact a third party service via HTTP (for example a REST service). Another example is a proxy application that receives HTTP requests and forwards them as FCGI requests to a PHP application such as WordPress, or receives HTTP/1.1 requests and converts them to HTTP/2 or HTTP/3. Yet another example is a client application that needs to receive events from a WebSocket server.

The client libraries are designed to be non-blocking and offer both synchronous and asynchronous APIs and come with many configuration options.

These are the available client libraries:

-

The High-Level HTTP Client Library for HTTP/1.1, HTTP/2, HTTP/3 and FastCGI

-

The Low-Level HTTP/2 Client Library for low-level HTTP/2

-

The Low-Level HTTP/3 Client Library for low-level HTTP/3

If you are interested in the low-level details of how the Jetty client libraries work, or are interested in writing a custom protocol, look at the Client I/O Architecture.

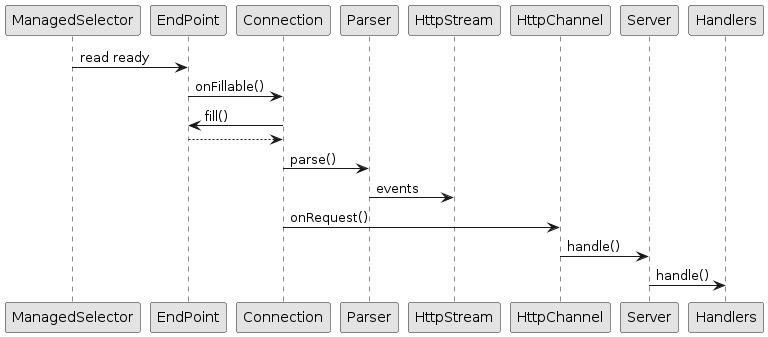

I/O Architecture

The Jetty client libraries provide the basic components and APIs to implement a client application.

They build on the common Jetty I/O Architecture and provide client specific concepts (such as establishing a connection to a server).

There are conceptually two layers that compose the Jetty client libraries:

-

The transport layer, that handles the low-level communication with the server, and deals with buffers, threads, etc.

-

The protocol layer, that handles the high-level protocol by parsing the bytes read from the transport layer and by generating the bytes to write to the transport layer.

Transport Layer

The transport layer is the low-level layer that communicates with the server.

Protocols such as HTTP/1.1 and HTTP/2 are typically transported over TCP, while the newer HTTP/3 is transported over QUIC, which is itself transported over UDP.

However, there are other means of communication supported by the Jetty client libraries, in particular over Unix-Domain sockets (for inter-process communication), and over memory (for intra-process communication).

The same high-level protocol can be carried by different low-level transports. For example, the high-level HTTP/1.1 protocol can be transported over either TCP (the default), or QUIC, or Unix-Domain sockets, or memory, because all these low-level transport provide reliable and ordered communication between client and server.

Similarly, the high-level HTTP/3 protocol can be transported over either QUIC (the default) or memory.

It would be possible to transport HTTP/3 also over Unix-Domain sockets, but the current version of Java only supports Unix-Domain sockets for SocketChannels and not for DatagramChannels.

The Jetty client libraries use the common I/O design described in this section.

The common I/O components and concepts are used for all low-level transports.

The only partial exception is the memory transport, which is not based on network components; as such it does not need a SelectorManager, but it exposes EndPoint so that high-level protocols have a common interface to interact with the low-level transport.

The client-side abstraction for the low-level transport is org.eclipse.jetty.io.Transport.

Transport represents how high-level protocols can be transported; there is Transport.TCP_IP that represents communication over TCP, but also Transport.TCPUnix for Unix-Domain sockets, QuicTransport for QUIC and MemoryTransport for memory.

Applications can specify the Transport to use for each request as described in this section.

When the Transport implementation uses the network, it delegates to org.eclipse.jetty.io.ClientConnector.

ClientConnector primarily wraps org.eclipse.jetty.io.SelectorManager to provide network functionalities, and aggregates other four components:

-

a thread pool (in form of an

java.util.concurrent.Executor) -

a scheduler (in form of

org.eclipse.jetty.util.thread.Scheduler) -

a byte buffer pool (in form of

org.eclipse.jetty.io.ByteBufferPool) -

a TLS factory (in form of

org.eclipse.jetty.util.ssl.SslContextFactory.Client)

The ClientConnector is where you want to set those components after you have configured them.

If you don’t explicitly set those components on the ClientConnector, then appropriate defaults will be chosen when the ClientConnector starts.

ClientConnector manages all network-related components, and therefore it is used for TCP, UDP, QUIC and Unix-Domain sockets.

The simplest example that creates and starts a ClientConnector is the following:

ClientConnector clientConnector = new ClientConnector();

clientConnector.start();A more typical example:

// Create and configure the SslContextFactory.

SslContextFactory.Client sslContextFactory = new SslContextFactory.Client();

sslContextFactory.addExcludeProtocols("TLSv1", "TLSv1.1");

// Create and configure the thread pool.

QueuedThreadPool threadPool = new QueuedThreadPool();

threadPool.setName("client");

// Create and configure the ClientConnector.

ClientConnector clientConnector = new ClientConnector();

clientConnector.setSslContextFactory(sslContextFactory);

clientConnector.setExecutor(threadPool);

clientConnector.start();A more advanced example that customizes the ClientConnector by overriding some of its methods:

class CustomClientConnector extends ClientConnector

{

@Override

protected SelectorManager newSelectorManager()

{

return new ClientSelectorManager(getExecutor(), getScheduler(), getSelectors())

{

@Override

protected void endPointOpened(EndPoint endpoint)

{

System.getLogger("endpoint").log(INFO, "opened %s", endpoint);

}

@Override

protected void endPointClosed(EndPoint endpoint)

{

System.getLogger("endpoint").log(INFO, "closed %s", endpoint);

}

};

}

}

// Create and configure the thread pool.

QueuedThreadPool threadPool = new QueuedThreadPool();

threadPool.setName("client");

// Create and configure the scheduler.

Scheduler scheduler = new ScheduledExecutorScheduler("scheduler-client", false);

// Create and configure the custom ClientConnector.

CustomClientConnector clientConnector = new CustomClientConnector();

clientConnector.setExecutor(threadPool);

clientConnector.setScheduler(scheduler);

clientConnector.start();Since ClientConnector is the component that handles the low-level network transport, it is also the component where you want to configure the low-level network configuration.

The most common parameters are:

-

ClientConnector.selectors: the number ofjava.nio.Selectors components (defaults to1) that are present to handle theSocketChannels andDatagramChannels opened by theClientConnector. You typically want to increase the number of selectors only for those use cases where each selector should handle more than few hundreds concurrent socket events. For example, one selector typically runs well for250concurrent socket events; as a rule of thumb, you can multiply that number by10to obtain the number of opened sockets a selector can handle (2500), based on the assumption that not all the2500sockets will be active at the same time. -

ClientConnector.idleTimeout: the duration of time after whichClientConnectorcloses a socket due to inactivity (defaults to30seconds). This is an important parameter to configure, and you typically want the client idle timeout to be shorter than the server idle timeout, to avoid race conditions where the client attempts to use a socket just before the client-side idle timeout expires, but the server-side idle timeout has already expired and the is already closing the socket. -

ClientConnector.connectBlocking: whether the operation of connecting a socket to the server (i.e.SocketChannel.connect(SocketAddress)) must be a blocking or a non-blocking operation (defaults tofalse). Forlocalhostor same datacenter hosts you want to set this parameter totruebecause DNS resolution will be immediate (and likely never fail). For generic Internet hosts (e.g. when you are implementing a web spider) you want to set this parameter tofalse. -

ClientConnector.connectTimeout: the duration of time after whichClientConnectoraborts a connection attempt to the server (defaults to5seconds). This time includes the DNS lookup time and the TCP connect time.

Please refer to the ClientConnector javadocs for the complete list of configurable parameters.

Unix-Domain Support

JEP 380 introduced Unix-Domain sockets support in Java 16, on all operative systems, but only for SocketChannels (not for DatagramChannels).

ClientConnector handles Unix-Domain sockets exactly like it handles regular TCP sockets, so there is no additional configuration necessary — Unix-Domain sockets are supported out-of-the-box.

Applications can specify the Transport to use for each request as described in this section.

Memory Support

In addition to support communication between client and server via network or Unix-Domain, the Jetty client libraries also support communication between client and server via memory for intra-process communication. This means that the client and server must be in the same JVM process.

This functionality is provided by org.eclipse.jetty.server.MemoryTransport, which does not delegate to ClientConnector, but instead delegates to the server-side MemoryConnector and its related classes.

Applications can specify the Transport to use for each request as described in this section.

Protocol Layer

The protocol layer builds on top of the transport layer to generate the bytes to be written to the low-level transport and to parse the bytes read from the low-level transport.

Recall from this section that Jetty uses the Connection abstraction to produce and interpret the low-level transport bytes.

On the client side, a ClientConnectionFactory implementation is the component that creates Connection instances based on the protocol that the client wants to "speak" with the server.

Applications may use ClientConnector.connect(SocketAddress, Map<String, Object>) to establish a TCP connection to the server, and must provide ClientConnector with the following information in the context map:

-

A

Transportinstance that specifies the low-level transport to use. -

A

ClientConnectionFactorythat createsConnectioninstances for the high-level protocol. -

A

Promisethat is notified when the connection creation succeeds or fails.

For example:

class CustomConnection extends AbstractConnection

{

public CustomConnection(EndPoint endPoint, Executor executor)

{

super(endPoint, executor);

}

@Override

public void onOpen()

{

super.onOpen();

System.getLogger("connection").log(INFO, "Opened connection {0}", this);

}

@Override

public void onFillable()

{

}

}

ClientConnector clientConnector = new ClientConnector();

clientConnector.start();

String host = "serverHost";

int port = 8080;

SocketAddress address = new InetSocketAddress(host, port);

// The Transport instance.

Transport transport = Transport.TCP_IP;

// The ClientConnectionFactory that creates CustomConnection instances.

ClientConnectionFactory connectionFactory = (endPoint, context) ->

{

System.getLogger("connection").log(INFO, "Creating connection for {0}", endPoint);

return new CustomConnection(endPoint, clientConnector.getExecutor());

};

// The Promise to notify of connection creation success or failure.

CompletableFuture<CustomConnection> connectionPromise = new Promise.Completable<>();

// Populate the context with the mandatory keys to create and obtain connections.

Map<String, Object> context = new ConcurrentHashMap<>();

context.put(Transport.class.getName(), transport);

context.put(ClientConnector.CLIENT_CONNECTION_FACTORY_CONTEXT_KEY, connectionFactory);

context.put(ClientConnector.CONNECTION_PROMISE_CONTEXT_KEY, connectionPromise);

clientConnector.connect(address, context);

// Use the Connection when it's available.

// Use it in a non-blocking way via CompletableFuture APIs.

connectionPromise.whenComplete((connection, failure) ->

{

System.getLogger("connection").log(INFO, "Created connection for {0}", connection);

});

// Alternatively, you can block waiting for the connection (or a failure).

// CustomConnection connection = connectionPromise.get();When a Connection is created successfully, its onOpen() method is invoked, and then the promise is completed successfully.

It is now possible to write a super-simple telnet client that reads and writes string lines:

class TelnetConnection extends AbstractConnection

{

private final ByteArrayOutputStream bytes = new ByteArrayOutputStream();

private Consumer<String> consumer;

public TelnetConnection(EndPoint endPoint, Executor executor)

{

super(endPoint, executor);

}

@Override

public void onOpen()

{

super.onOpen();

// Declare interest for fill events.

fillInterested();

}

@Override

public void onFillable()

{

try

{

ByteBuffer buffer = BufferUtil.allocate(1024);

while (true)

{

int filled = getEndPoint().fill(buffer);

if (filled > 0)

{

while (buffer.hasRemaining())

{

// Search for newline.

byte read = buffer.get();

if (read == '\n')

{

// Notify the consumer of the line.

consumer.accept(bytes.toString(StandardCharsets.UTF_8));

bytes.reset();

}

else

{

bytes.write(read);

}

}

}

else if (filled == 0)

{

// No more bytes to fill, declare

// again interest for fill events.

fillInterested();

return;

}

else

{

// The other peer closed the

// connection, close it back.

getEndPoint().close();

return;

}

}

}

catch (Exception x)

{

getEndPoint().close(x);

}

}

public void onLine(Consumer<String> consumer)

{

this.consumer = consumer;

}

public void writeLine(String line, Callback callback)

{

line = line + "\r\n";

getEndPoint().write(callback, ByteBuffer.wrap(line.getBytes(StandardCharsets.UTF_8)));

}

}

ClientConnector clientConnector = new ClientConnector();

clientConnector.start();

String host = "example.org";

int port = 80;

SocketAddress address = new InetSocketAddress(host, port);

ClientConnectionFactory connectionFactory = (endPoint, context) ->

new TelnetConnection(endPoint, clientConnector.getExecutor());

CompletableFuture<TelnetConnection> connectionPromise = new Promise.Completable<>();

Map<String, Object> context = new HashMap<>();

context.put(Transport.class.getName(), Transport.TCP_IP);

context.put(ClientConnector.CLIENT_CONNECTION_FACTORY_CONTEXT_KEY, connectionFactory);

context.put(ClientConnector.CONNECTION_PROMISE_CONTEXT_KEY, connectionPromise);

clientConnector.connect(address, context);

connectionPromise.whenComplete((connection, failure) ->

{

if (failure == null)

{

// Register a listener that receives string lines.

connection.onLine(line -> System.getLogger("app").log(INFO, "line: {0}", line));

// Write a line.

connection.writeLine("GET / HTTP/1.0\r\n", Callback.NOOP);

}

else

{

failure.printStackTrace();

}

});Note how a very basic "telnet" API that applications could use is implemented in the form of the onLine(Consumer<String>) for the non-blocking receiving side and writeLine(String, Callback) for the non-blocking sending side.

Note also how the onFillable() method implements some basic "parsing" by looking up the \n character in the buffer.

| The "telnet" client above looks like a super-simple HTTP client because HTTP/1.0 can be seen as a line-based protocol. HTTP/1.0 was used just as an example, but we could have used any other line-based protocol such as SMTP, provided that the server was able to understand it. |

This is very similar to what the Jetty client implementation does for real network protocols. Real network protocols are of course more complicated and so is the implementation code that handles them, but the general ideas are similar.

The Jetty client implementation provides a number of ClientConnectionFactory implementations that can be composed to produce and interpret the network bytes.

For example, it is simple to modify the above example to use the TLS protocol so that you will be able to connect to the server on port 443, typically reserved for the secure HTTP protocol.

The differences between the clear-text version and the TLS encrypted version are minimal:

class TelnetConnection extends AbstractConnection

{

private final ByteArrayOutputStream bytes = new ByteArrayOutputStream();

private Consumer<String> consumer;

public TelnetConnection(EndPoint endPoint, Executor executor)

{

super(endPoint, executor);

}

@Override

public void onOpen()

{

super.onOpen();

// Declare interest for fill events.

fillInterested();

}

@Override

public void onFillable()

{

try

{

ByteBuffer buffer = BufferUtil.allocate(1024);

while (true)

{

int filled = getEndPoint().fill(buffer);

if (filled > 0)

{

while (buffer.hasRemaining())

{

// Search for newline.

byte read = buffer.get();

if (read == '\n')

{

// Notify the consumer of the line.

consumer.accept(bytes.toString(StandardCharsets.UTF_8));

bytes.reset();

}

else

{

bytes.write(read);

}

}

}

else if (filled == 0)

{

// No more bytes to fill, declare

// again interest for fill events.

fillInterested();

return;

}

else

{

// The other peer closed the

// connection, close it back.

getEndPoint().close();

return;

}

}

}

catch (Exception x)

{

getEndPoint().close(x);

}

}

public void onLine(Consumer<String> consumer)

{

this.consumer = consumer;

}

public void writeLine(String line, Callback callback)

{

line = line + "\r\n";

getEndPoint().write(callback, ByteBuffer.wrap(line.getBytes(StandardCharsets.UTF_8)));

}

}

ClientConnector clientConnector = new ClientConnector();

clientConnector.start();

// Use port 443 to contact the server using encrypted HTTP.

String host = "example.org";

int port = 443;

SocketAddress address = new InetSocketAddress(host, port);

ClientConnectionFactory connectionFactory = (endPoint, context) ->

new TelnetConnection(endPoint, clientConnector.getExecutor());

// Wrap the "telnet" ClientConnectionFactory with the SslClientConnectionFactory.

connectionFactory = new SslClientConnectionFactory(clientConnector.getSslContextFactory(),

clientConnector.getByteBufferPool(), clientConnector.getExecutor(), connectionFactory);

// We will obtain a SslConnection now.

CompletableFuture<SslConnection> connectionPromise = new Promise.Completable<>();

Map<String, Object> context = new ConcurrentHashMap<>();

context.put(Transport.class.getName(), Transport.TCP_IP);

context.put(ClientConnector.CLIENT_CONNECTION_FACTORY_CONTEXT_KEY, connectionFactory);

context.put(ClientConnector.CONNECTION_PROMISE_CONTEXT_KEY, connectionPromise);

clientConnector.connect(address, context);

connectionPromise.whenComplete((sslConnection, failure) ->

{

if (failure == null)

{

// Unwrap the SslConnection to access the "line" APIs in TelnetConnection.

TelnetConnection connection = (TelnetConnection)sslConnection.getSslEndPoint().getConnection();

// Register a listener that receives string lines.

connection.onLine(line -> System.getLogger("app").log(INFO, "line: {0}", line));

// Write a line.

connection.writeLine("GET / HTTP/1.0\r\n", Callback.NOOP);

}

else

{

failure.printStackTrace();

}

});The differences with the clear-text version are only:

-

Change the port from

80to443. -

Wrap the

ClientConnectionFactorywithSslClientConnectionFactory. -

Unwrap the

SslConnectionto accessTelnetConnection.

HTTP Client

HttpClient Introduction

The Jetty HTTP client module provides easy-to-use APIs and utility classes to perform HTTP (or HTTPS) requests.

Jetty’s HTTP client is non-blocking and asynchronous. It offers an asynchronous API that never blocks for I/O, making it very efficient in thread utilization and well suited for high performance scenarios such as load testing or parallel computation.

However, when all you need to do is to perform a GET request to a resource, Jetty’s HTTP client offers also a synchronous API; a programming interface where the thread that issued the request blocks until the request/response conversation is complete.

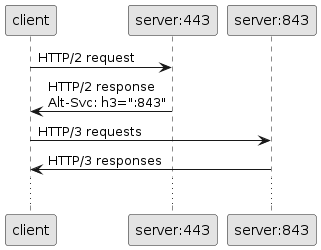

Jetty’s HTTP client supports different HTTP formats: HTTP/1.1, HTTP/2, HTTP/3 and FastCGI.

Each format has a different HttpClientTransport implementation, that in turn use a low-level transport to communicate with the server.

This means that the semantic of an HTTP request such as: " GET the resource /index.html " can be carried over the low-level transport in different formats.

The most common and default format is HTTP/1.1.

That said, Jetty’s HTTP client can carry the same request using the HTTP/2 format, the HTTP/3 format, or the FastCGI format.

Furthermore, every format can be transported over different low-level transport, such as TCP, Unix-Domain sockets, QUIC or memory. Supports for Unix-Domain sockets requires Java 16 or later, since Unix-Domain sockets support has been introduced in OpenJDK with JEP 380.

The FastCGI format is used in Jetty’s FastCGI support that allows Jetty to work as a reverse proxy to PHP (exactly like Apache or Nginx do) and therefore be able to serve, for example, WordPress websites, often in conjunction with Unix-Domain sockets (although it is possible to use FastCGI via network too).

The HTTP/2 format allows Jetty’s HTTP client to perform requests using HTTP/2 to HTTP/2 enabled websites, see also Jetty’s HTTP/2 support.

The HTTP/3 format allows Jetty’s HTTP client to perform requests using HTTP/3 to HTTP/3 enabled websites, see also Jetty’s HTTP/3 support.

Out of the box features that you get with the Jetty HTTP client include:

-

Redirect support — redirect codes such as 302 or 303 are automatically followed.

-

Cookies support — cookies sent by servers are stored and sent back to servers in matching requests.

-

Authentication support — HTTP "Basic", "Digest" and "SPNEGO" authentications are supported, others are pluggable.

-

Forward proxy support — HTTP proxying, SOCKS4 and SOCKS5 proxying.

Starting HttpClient

The Jetty artifact that provides the main HTTP client implementation is jetty-client.

The Maven artifact coordinates are the following:

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-client</artifactId>

<version>12.0.9-SNAPSHOT</version>

</dependency>The main class is named org.eclipse.jetty.client.HttpClient.

You can think of a HttpClient instance as a browser instance.

Like a browser it can make requests to different domains, it manages redirects, cookies and authentication, you can configure it with a forward proxy, and it provides you with the responses to the requests you make.

In order to use HttpClient, you must instantiate it, configure it, and then start it:

// Instantiate HttpClient.

HttpClient httpClient = new HttpClient();

// Configure HttpClient, for example:

httpClient.setFollowRedirects(false);

// Start HttpClient.

httpClient.start();You may create multiple instances of HttpClient, but typically one instance is enough for an application.

There are several reasons for having multiple HttpClient instances including, but not limited to:

-

You want to specify different configuration parameters (for example, one instance is configured with a forward proxy while another is not).

-

You want the two instances to behave like two different browsers and hence have different cookies, different authentication credentials, etc.

-

You want to use different

HttpClientTransports.

Like browsers, HTTPS requests are supported out-of-the-box (see this section for the TLS configuration), as long as the server provides a valid certificate.

In case the server does not provide a valid certificate (or in case it is self-signed) you want to customize HttpClient's TLS configuration as described in this section.

Stopping HttpClient

It is recommended that when your application stops, you also stop the HttpClient instance (or instances) that you are using.

// Stop HttpClient.

httpClient.stop();Stopping HttpClient makes sure that the memory it holds (for example, ByteBuffer pools, authentication credentials, cookies, etc.) is released, and that the thread pool and scheduler are properly stopped allowing all threads used by HttpClient to exit.

|

You cannot call |

HttpClient Architecture

A HttpClient instance can be thought as a browser instance, and it manages the following components:

-

A

CookieStore(see this section). -

A

AuthenticationStore(see this section). -

A

ProxyConfiguration(see this section). -

A set of

Destinations

A Destination is the client-side component that represents an origin server, and manages a queue of requests for that origin, and a pool of connections to that origin.

An origin may be simply thought as the tuple (scheme, host, port) and it is where the client connects to in order to communicate with the server.

However, this is not enough.

If you use HttpClient to write a proxy you may have different clients that want to contact the same server.

In this case, you may not want to use the same proxy-to-server connection to proxy requests for both clients, for example for authentication reasons: the server may associate the connection with authentication credentials, and you do not want to use the same connection for two different users that have different credentials.

Instead, you want to use different connections for different clients and this can be achieved by "tagging" a destination with a tag object that represents the remote client (for example, it could be the remote client IP address).

Two origins with the same (scheme, host, port) but different tag create two different destinations and therefore two different connection pools.

However, also this is not enough.

It is possible for a server to speak different protocols on the same port.

A connection may start by speaking one protocol, for example HTTP/1.1, but then be upgraded to speak a different protocol, for example HTTP/2. After a connection has been upgraded to a second protocol, it cannot speak the first protocol anymore, so it can only be used to communicate using the second protocol.

Two origins with the same (scheme, host, port, tag) but different protocol create two different destinations and therefore two different connection pools.

Finally, it is possible for a server to speak the same protocol over different low-level transports (represented by Transport), for example TCP and Unix-Domain.

Two origins with the same (scheme, host, port, tag, protocol) but different low-level transports create two different destinations and therefore two different connection pools.

Therefore, an origin is identified by the tuple (scheme, host, port, tag, protocol, transport).

HttpClient Connection Pooling

A Destination manages a org.eclipse.jetty.client.ConnectionPool, where connections to a particular origin are pooled for performance reasons: opening a connection is a costly operation, and it’s better to reuse them for multiple requests.

Remember that to select a specific Destination you must select a specific origin, and that an origin is identified by the tuple (scheme, host, port, tag, protocol, transport), so you can have multiple Destinations for the same host and port, and therefore multiple ConnectionPools

|

You can access the ConnectionPool in this way:

HttpClient httpClient = new HttpClient();

httpClient.start();

ConnectionPool connectionPool = httpClient.getDestinations().stream()

// Find the destination by filtering on the Origin.

.filter(destination -> destination.getOrigin().getAddress().getHost().equals("domain.com"))

.findAny()

// Get the ConnectionPool.

.map(Destination::getConnectionPool)

.orElse(null);Jetty’s client library provides the following ConnectionPool implementations:

-

DuplexConnectionPool, historically the first implementation, only used by the HTTP/1.1 transport. -

MultiplexConnectionPool, the generic implementation valid for any transport where connections are reused with a most recently used algorithm (that is, the connections most recently returned to the connection pool are the more likely to be used again). -

RoundRobinConnectionPool, similar toMultiplexConnectionPoolbut where connections are reused with a round-robin algorithm. -

RandomRobinConnectionPool, similar toMultiplexConnectionPoolbut where connections are reused with an algorithm that chooses them randomly.

The ConnectionPool implementation can be customized for each destination in by setting a ConnectionPool.Factory on the HttpClientTransport:

HttpClient httpClient = new HttpClient();

httpClient.start();

// The max number of connections in the pool.

int maxConnectionsPerDestination = httpClient.getMaxConnectionsPerDestination();

// The max number of requests per connection (multiplexing).

// Start with 1, since this value is dynamically set to larger values if

// the transport supports multiplexing requests on the same connection.

int maxRequestsPerConnection = 1;

HttpClientTransport transport = httpClient.getTransport();

// Set the ConnectionPool.Factory using a lambda.

transport.setConnectionPoolFactory(destination ->

new RoundRobinConnectionPool(destination,

maxConnectionsPerDestination,

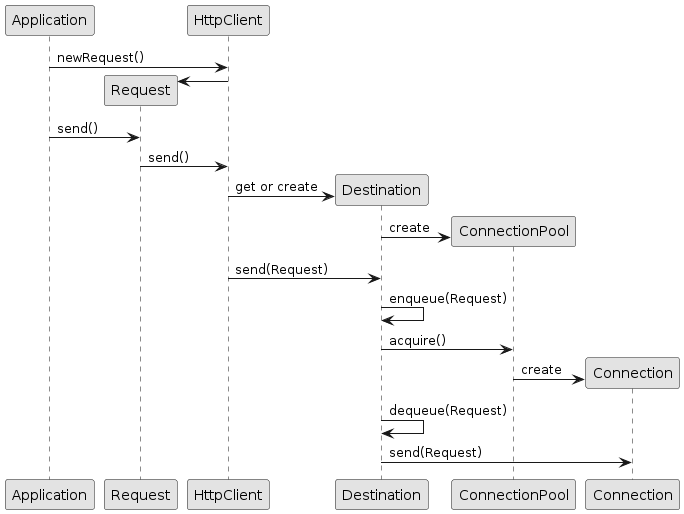

maxRequestsPerConnection));HttpClient Request Processing

When a request is sent, an origin is computed from the request; HttpClient uses that origin to find (or create if it does not exist) the correspondent destination.

The request is then queued onto the destination, and this causes the destination to ask its connection pool for a free connection.

If a connection is available, it is returned, otherwise a new connection is created.

Once the destination has obtained the connection, it dequeues the request and sends it over the connection.

The first request to a destination triggers the opening of the first connection.

A second request with the same origin sent after the first request/response cycle is completed may reuse the same connection, depending on the connection pool implementation.

A second request with the same origin sent concurrently with the first request will likely cause the opening of a second connection, depending on the connection pool implementation.

The configuration parameter HttpClient.maxConnectionsPerDestination (see also the configuration section) controls the max number of connections that can be opened for a destination.

| If opening connections to a given origin takes a long time, then requests for that origin will queue up in the corresponding destination until the connections are established. |

Each connection can handle a limited number of concurrent requests.

For HTTP/1.1, this number is always 1: there can only be one outstanding request for each connection.

For HTTP/2 this number is determined by the server max_concurrent_stream setting (typically around 100, i.e. there can be up to 100 outstanding requests for every connection).

When a destination has maxed out its number of connections, and all connections have maxed out their number of outstanding requests, more requests sent to that destination will be queued.

When the request queue is full, the request will be failed.

The configuration parameter HttpClient.maxRequestsQueuedPerDestination (see also the configuration section) controls the max number of requests that can be queued for a destination.

HttpClient API Usage

HttpClient provides two types of APIs: a blocking API and a non-blocking API.

HttpClient Blocking APIs

The simpler way to perform a HTTP request is the following:

HttpClient httpClient = new HttpClient();

httpClient.start();

// Perform a simple GET and wait for the response.

ContentResponse response = httpClient.GET("http://domain.com/path?query");The method HttpClient.GET(...) performs a HTTP GET request to the given URI and returns a ContentResponse when the request/response conversation completes successfully.

The ContentResponse object contains the HTTP response information: status code, headers and possibly content.

The content length is limited by default to 2 MiB; for larger content see the section on response content handling.

If you want to customize the request, for example by issuing a HEAD request instead of a GET, and simulating a browser user agent, you can do it in this way:

ContentResponse response = httpClient.newRequest("http://domain.com/path?query")

.method(HttpMethod.HEAD)

.agent("Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:17.0) Gecko/20100101 Firefox/17.0")

.send();This is a shorthand for:

Request request = httpClient.newRequest("http://domain.com/path?query");

request.method(HttpMethod.HEAD);

request.agent("Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:17.0) Gecko/20100101 Firefox/17.0");

ContentResponse response = request.send();You first create a request object using httpClient.newRequest(...), and then you customize it using the fluent API style (that is, a chained invocation of methods on the request object).

When the request object is customized, you call request.send() that produces the ContentResponse when the request/response conversation is complete.

The Request object, despite being mutable, cannot be reused for other requests.

This is true also when trying to send two or more identical requests: you have to create two or more Request objects.

|

Simple POST requests also have a shortcut method:

ContentResponse response = httpClient.POST("http://domain.com/entity/1")

.param("p", "value")

.send();The POST parameter values added via the param() method are automatically URL-encoded.

Jetty’s HttpClient automatically follows redirects, so it handles the typical web pattern POST/Redirect/GET, and the response object contains the content of the response of the GET request.

Following redirects is a feature that you can enable/disable on a per-request basis or globally.

File uploads also require one line, and make use of java.nio.file classes:

ContentResponse response = httpClient.POST("http://domain.com/upload")

.file(Paths.get("file_to_upload.txt"), "text/plain")

.send();It is possible to impose a total timeout for the request/response conversation using the Request.timeout(...) method as follows:

ContentResponse response = httpClient.newRequest("http://domain.com/path?query")

.timeout(5, TimeUnit.SECONDS)

.send();In the example above, when the 5 seconds expire, the request/response cycle is aborted and a java.util.concurrent.TimeoutException is thrown.

HttpClient Non-Blocking APIs

So far we have shown how to use Jetty HTTP client in a blocking style — that is, the thread that issues the request blocks until the request/response conversation is complete.

This section will look at Jetty’s HttpClient non-blocking, asynchronous APIs that are perfectly suited for large content downloads, for parallel processing of requests/responses and in cases where performance and efficient thread and resource utilization is a key factor.

The asynchronous APIs rely heavily on listeners that are invoked at various stages of request and response processing. These listeners are implemented by applications and may perform any kind of logic. The implementation invokes these listeners in the same thread that is used to process the request or response. Therefore, if the application code in these listeners takes a long time to execute, the request or response processing is delayed until the listener returns.

If you need to execute application code that takes long time inside a listener, it is typically better to spawn your own thread to execute the code that takes long time. In this way you return from the listener as soon as possible and allow the implementation to resume the processing of the request or response (or of other requests/responses).

Request and response processing are executed by two different threads and therefore may happen concurrently. A typical example of this concurrent processing is an echo server, where a large upload may be concurrent with the large download echoed back.

| Remember that responses may be processed and completed before requests; a typical example is a large upload that triggers a quick response, for example an error, by the server: the response may arrive and be completed while the request content is still being uploaded. |

The application thread that calls Request.send(Response.CompleteListener) performs the processing of the request until either the request is fully sent over the network or until it would block on I/O, then it returns (and therefore never blocks).

If it would block on I/O, the thread asks the I/O system to emit an event when the I/O will be ready to continue, then returns.

When such an event is fired, a thread taken from the HttpClient thread pool will resume the processing of the request.

Response are processed from the I/O thread taken from the HttpClient thread pool that processes the event that bytes are ready to be read.

Response processing continues until either the response is fully processed or until it would block for I/O.

If it would block for I/O, the thread asks the I/O system to emit an event when the I/O will be ready to continue, then returns.

When such an event is fired, a (possibly different) thread taken from the HttpClient thread pool will resume the processing of the response.

When the request and the response are both fully processed, the thread that finished the last processing (usually the thread that processes the response, but may also be the thread that processes the request — if the request takes more time than the response to be processed) is used to dequeue the next request for the same destination and to process it.

A simple non-blocking GET request that discards the response content can be written in this way:

httpClient.newRequest("http://domain.com/path")

.send(result ->

{

// Your logic here

});Method Request.send(Response.CompleteListener) returns void and does not block; the Response.CompleteListener lambda provided as a parameter is notified when the request/response conversation is complete, and the Result parameter allows you to access the request and response objects as well as failures, if any.

You can impose a total timeout for the request/response conversation in the same way used by the synchronous API:

httpClient.newRequest("http://domain.com/path")

.timeout(3, TimeUnit.SECONDS)

.send(result ->

{

/* Your logic here */

});The example above will impose a total timeout of 3 seconds on the request/response conversation.

The HTTP client APIs use listeners extensively to provide hooks for all possible request and response events:

httpClient.newRequest("http://domain.com/path")

// Add request hooks.

.onRequestQueued(request -> { /* ... */ })

.onRequestBegin(request -> { /* ... */ })

.onRequestHeaders(request -> { /* ... */ })

.onRequestCommit(request -> { /* ... */ })

.onRequestContent((request, content) -> { /* ... */ })

.onRequestFailure((request, failure) -> { /* ... */ })

.onRequestSuccess(request -> { /* ... */ })

// Add response hooks.

.onResponseBegin(response -> { /* ... */ })

.onResponseHeader((response, field) -> true)

.onResponseHeaders(response -> { /* ... */ })

.onResponseContentAsync((response, chunk, demander) -> demander.run())

.onResponseFailure((response, failure) -> { /* ... */ })

.onResponseSuccess(response -> { /* ... */ })

// Result hook.

.send(result -> { /* ... */ });This makes Jetty HTTP client suitable for HTTP load testing because, for example, you can accurately time every step of the request/response conversation (thus knowing where the request/response time is really spent).

|

The code in request and response listeners should not block. It is allowed to call other blocking APIs, such as the Java file-system APIs. You should not call blocking APIs that:

If the listener code blocks, the implementation also will be blocked and will not be able to advance the processing of the request or response that the listener code is likely waiting for, causing a deadlock. |

Have a look at the Request.Listener class to know about request events, and to the Response.Listener class to know about response events.

Request Content Handling

Jetty’s HttpClient provides a number of utility classes off the shelf to handle request content.

You can provide request content as String, byte[], ByteBuffer, java.nio.file.Path, InputStream, and provide your own implementation of org.eclipse.jetty.client.Request.Content.

Here’s an example that provides the request content using java.nio.file.Paths:

ContentResponse response = httpClient.POST("http://domain.com/upload")

.body(new PathRequestContent("text/plain", Paths.get("file_to_upload.txt")))

.send();Alternatively, you can use FileInputStream via the InputStreamRequestContent utility class:

ContentResponse response = httpClient.POST("http://domain.com/upload")

.body(new InputStreamRequestContent("text/plain", new FileInputStream("file_to_upload.txt")))

.send();Since InputStream is blocking, then also the send of the request will block if the input stream blocks, even in case of usage of the non-blocking HttpClient APIs.

If you have already read the content in memory, you can pass it as a byte[] (or a String) using the BytesRequestContent (or StringRequestContent) utility class:

ContentResponse bytesResponse = httpClient.POST("http://domain.com/upload")

.body(new BytesRequestContent("text/plain", bytes))

.send();

ContentResponse stringResponse = httpClient.POST("http://domain.com/upload")

.body(new StringRequestContent("text/plain", string))

.send();If the request content is not immediately available, but your application will be notified of the content to send, you can use AsyncRequestContent in this way:

AsyncRequestContent content = new AsyncRequestContent();

httpClient.POST("http://domain.com/upload")

.body(content)

.send(result ->

{

// Your logic here

});

// Content not available yet here.

// An event happens in some other class, in some other thread.

class ContentPublisher

{

void publish(byte[] bytes, boolean lastContent)

{

// Wrap the bytes into a new ByteBuffer.

ByteBuffer buffer = ByteBuffer.wrap(bytes);

// Write the content.

content.write(buffer, Callback.NOOP);

// Close AsyncRequestContent when all the content is arrived.

if (lastContent)

content.close();

}

}While the request content is awaited and consequently uploaded by the client application, the server may be able to respond (at least with the response headers) completely asynchronously.

In this case, Response.Listener callbacks will be invoked before the request is fully sent.

This allows fine-grained control of the request/response conversation: for example the server may reject contents that are too big, send a response to the client, which in turn may stop the content upload.

Another way to provide request content is by using an OutputStreamRequestContent, which allows applications to write request content when it is available to the OutputStream provided by OutputStreamRequestContent:

OutputStreamRequestContent content = new OutputStreamRequestContent();

// Use try-with-resources to close the OutputStream when all content is written.

try (OutputStream output = content.getOutputStream())

{

httpClient.POST("http://localhost:8080/")

.body(content)

.send(result ->

{

// Your logic here

});

// Content not available yet here.

// Content is now available.

byte[] bytes = new byte[]{'h', 'e', 'l', 'l', 'o'};

output.write(bytes);

}

// End of try-with-resource, output.close() called automatically to signal end of content.Response Content Handling

Jetty’s HttpClient allows applications to handle response content in different ways.

You can buffer the response content in memory; this is done when using the blocking APIs and the content is buffered within a ContentResponse up to 2 MiB.

If you want to control the length of the response content (for example limiting to values smaller than the default of 2 MiB), then you can use a org.eclipse.jetty.client.CompletableResponseListener in this way:

Request request = httpClient.newRequest("http://domain.com/path");

// Limit response content buffer to 512 KiB.

CompletableFuture<ContentResponse> completable = new CompletableResponseListener(request, 512 * 1024)

.send();

// You can attach actions to the CompletableFuture,

// to be performed when the request+response completes.

// Wait at most 5 seconds for request+response to complete.

ContentResponse response = completable.get(5, TimeUnit.SECONDS);If the response content length is exceeded, the response will be aborted, and an exception will be thrown by method get(...).

You can buffer the response content in memory also using the non-blocking APIs, via the BufferingResponseListener utility class:

httpClient.newRequest("http://domain.com/path")

// Buffer response content up to 8 MiB

.send(new BufferingResponseListener(8 * 1024 * 1024)

{

@Override

public void onComplete(Result result)

{

if (!result.isFailed())

{

byte[] responseContent = getContent();

// Your logic here

}

}

});If you want to avoid buffering, you can wait for the response and then stream the content using the InputStreamResponseListener utility class:

InputStreamResponseListener listener = new InputStreamResponseListener();

httpClient.newRequest("http://domain.com/path")

.send(listener);

// Wait for the response headers to arrive.

Response response = listener.get(5, TimeUnit.SECONDS);

// Look at the response before streaming the content.

if (response.getStatus() == HttpStatus.OK_200)

{

// Use try-with-resources to close input stream.

try (InputStream responseContent = listener.getInputStream())

{

// Your logic here

}

}

else

{

response.abort(new IOException("Unexpected HTTP response"));

}Finally, let’s look at the advanced usage of the response content handling.

The response content is provided by the HttpClient implementation to application listeners following the read/demand model of org.eclipse.jetty.io.Content.Source.

The listener that follows this model is Response.ContentSourceListener.

After the response headers have been processed by the HttpClient implementation, Response.ContentSourceListener.onContentSource(response, contentSource) is invoked once and only once.

This allows the application to control precisely the read/demand loop: when to read a chunk, how to process it and when to demand the next one.

You must provide a ContentSourceListener whose implementation reads a Content.Chunk from the provided Content.Source, as explained in this section.

The invocation of onContentSource(Request, Content.Source) and of the demand callback passed to contentSource.demand(Runnable) are serialized with respect to asynchronous events such as timeouts or an asynchronous call to Request.abort(Throwable).

This means that these asynchronous events are not processed until the invocation of onContentSource(Request, Content.Source) returns, or until the invocation of the demand callback returns.

With this model, applications should not worry too much about concurrent asynchronous events happening during response content handling, because they will eventually see the events as failures while reading the response content.

Demanding for content and consuming the content are orthogonal activities.

An application can read, store aside the Content.Chunk objects without releasing them (to consume them later), and demand for more chunks, but it must call Chunk.retain() on the stored chunks, and arrange to release them after they have been consumed later.

If not done carefully, this may lead to excessive memory consumption, since the ByteBuffer bytes are not consumed.

Releasing the Content.Chunks will result in the ByteBuffers to be disposed/recycled and may be performed at any time.

An application can also read one chunk of content, consume it, release it, and then not demand for more content until a later time.

Subclass Response.AsyncContentListener overrides the behavior of Response.ContentSourceListener; when an application implements AsyncContentListener.onContent(response, chunk, demander), it can control the disposing/recycling of the ByteBuffer by releasing the chunk and it can control when to demand one more chunk by calling demander.run().

Subclass Response.ContentListener overrides the behavior of Response.AsyncContentListener; when an application implementing its onContent(response, buffer) returns from the method itself, it will both the effect of disposing/recycling the buffer and the effect of demanding one more chunk of content.

An application that implements a forwarder between two servers can be implemented efficiently by handling the response content without copying the ByteBuffer bytes as in the following example:

// Prepare a request to server1, the source.

Request request1 = httpClient.newRequest(host1, port1)

.path("/source");

// Prepare a request to server2, the sink.

AsyncRequestContent content2 = new AsyncRequestContent();

Request request2 = httpClient.newRequest(host2, port2)

.path("/sink")

.body(content2);

request1.onResponseContentSource(new Response.ContentSourceListener()

{

@Override

public void onContentSource(Response response, Content.Source contentSource)

{

// Only execute this method the very first time

// to initialize the request to server2.

request2.onRequestCommit(request ->

{

// Only when the request to server2 has been sent,

// then demand response content from server1.

contentSource.demand(() -> forwardContent(response, contentSource));

});

// Send the request to server2.

request2.send(result -> System.getLogger("forwarder").log(INFO, "Forwarding to server2 complete"));

}

private void forwardContent(Response response, Content.Source contentSource)

{

// Read one chunk of content.

Content.Chunk chunk = contentSource.read();

if (chunk == null)

{

// The read chunk is null, demand to be called back

// when the next one is ready to be read.

contentSource.demand(() -> forwardContent(response, contentSource));

// Once a demand is in progress, the content source must not be read

// nor demanded again until the demand callback is invoked.

return;

}

// Check if the chunk is last and empty, in which case the

// read/demand loop is done. Demanding again when the terminal

// chunk has been read will invoke the demand callback with

// the same terminal chunk, so this check must be present to

// avoid infinitely demanding and reading the terminal chunk.

if (chunk.isLast() && !chunk.hasRemaining())

{

chunk.release();

return;

}

// When a response chunk is received from server1, forward it to server2.

content2.write(chunk.getByteBuffer(), Callback.from(() ->

{

// When the request chunk is successfully sent to server2,

// release the chunk to recycle the buffer.

chunk.release();

// Then demand more response content from server1.

contentSource.demand(() -> forwardContent(response, contentSource));

}, x ->

{

chunk.release();

response.abort(x);

}));

}

});

// When the response content from server1 is complete,

// complete also the request content to server2.

request1.onResponseSuccess(response -> content2.close());

// Send the request to server1.

request1.send(result -> System.getLogger("forwarder").log(INFO, "Sourcing from server1 complete"));Request Transport

The communication between client and server happens over a low-level transport, and applications can specify the low-level transport to use for each request.

This gives client applications great flexibility, because they can use the same HttpClient instance to communicate, for example, with an external third party web application via TCP, to a different process via Unix-Domain sockets, and efficiently to the same process via memory.

Client application can also choose more esoteric configurations such as using QUIC, typically used to transport HTTP/3, to transport HTTP/1.1 or HTTP/2, because QUIC provides reliable and ordered communication like TCP does.

Provided you have configured a UnixDomainServerConnector on the server, this is how you can configure a request to use Unix-Domain sockets:

// This is the path where the server "listens" on.

Path unixDomainPath = Path.of("/path/to/server.sock");

// Creates a ClientConnector.

ClientConnector clientConnector = new ClientConnector();

// You can use Unix-Domain for HTTP/1.1.

HttpClientTransportOverHTTP http1Transport = new HttpClientTransportOverHTTP(clientConnector);

// You can use Unix-Domain also for HTTP/2.

HTTP2Client http2Client = new HTTP2Client(clientConnector);

HttpClientTransportOverHTTP2 http2Transport = new HttpClientTransportOverHTTP2(http2Client);

// You can use Unix-Domain also for the dynamic transport.

ClientConnectionFactory.Info http1 = HttpClientConnectionFactory.HTTP11;

ClientConnectionFactoryOverHTTP2.HTTP2 http2 = new ClientConnectionFactoryOverHTTP2.HTTP2(http2Client);

HttpClientTransportDynamic dynamicTransport = new HttpClientTransportDynamic(clientConnector, http1, http2);

// Choose the transport you prefer for HttpClient, for example the dynamic transport.

HttpClient httpClient = new HttpClient(dynamicTransport);

httpClient.start();

ContentResponse response = httpClient.newRequest("jetty.org", 80)

// Specify that the request must be sent over Unix-Domain.

.transport(new Transport.TCPUnix(unixDomainPath))

.send();In the same way, if you have configured a MemoryConnector on the server, this is how you can configure a request to use memory for communication:

// The server-side MemoryConnector speaking HTTP/1.1.

Server server = new Server();

MemoryConnector memoryConnector = new MemoryConnector(server, new HttpConnectionFactory());

server.addConnector(memoryConnector);

// ...

// The code above is the server-side.

// ----

// The code below is the client-side.

HttpClient httpClient = new HttpClient();

httpClient.start();

// Use the MemoryTransport to communicate with the server-side.

Transport transport = new MemoryTransport(memoryConnector);

httpClient.newRequest("http://localhost/")

// Specify the Transport to use.

.transport(transport)

.send();This is a fancy example of how to mix HTTP versions and low-level transports:

HttpClient httpClient = new HttpClient(new HttpClientTransportDynamic(clientConnector, http2, http1, http3));

httpClient.start();

// Make a TCP request to a 3rd party web application.

ContentResponse thirdPartyResponse = httpClient.newRequest("https://third-party.com/api")

// No need to specify the Transport, TCP will be used by default.

.send();

// Upload the third party response content to a validation process.

ContentResponse validatedResponse = httpClient.newRequest("http://localhost/validate")

// The validation process is available via Unix-Domain.

.transport(new Transport.TCPUnix(unixDomainPath))

.method(HttpMethod.POST)

.body(new BytesRequestContent(thirdPartyResponse.getContent()))

.send();

// Process the validated response intra-process by sending

// it to another web application in the same Jetty server.

ContentResponse response = httpClient.newRequest("http://localhost/process")

// The processing is in-memory.

.transport(new MemoryTransport(memoryConnector))

.method(HttpMethod.POST)

.body(new BytesRequestContent(validatedResponse.getContent()))

.send();HttpClient Configuration

HttpClient has a quite large number of configuration parameters.

Please refer to the HttpClient javadocs for the complete list of configurable parameters.

The most common parameters are:

-

HttpClient.idleTimeout: same asClientConnector.idleTimeoutdescribed in this section. -

HttpClient.connectBlocking: same asClientConnector.connectBlockingdescribed in this section. -

HttpClient.connectTimeout: same asClientConnector.connectTimeoutdescribed in this section. -

HttpClient.maxConnectionsPerDestination: the max number of TCP connections that are opened for a particular destination (defaults to 64). -

HttpClient.maxRequestsQueuedPerDestination: the max number of requests queued (defaults to 1024).

HttpClient TLS Configuration

HttpClient supports HTTPS requests out-of-the-box like a browser does.

The support for HTTPS request is provided by a SslContextFactory.Client instance, typically configured in the ClientConnector.

If not explicitly configured, the ClientConnector will allocate a default one when started.

SslContextFactory.Client sslContextFactory = new SslContextFactory.Client();

ClientConnector clientConnector = new ClientConnector();

clientConnector.setSslContextFactory(sslContextFactory);

HttpClient httpClient = new HttpClient(new HttpClientTransportDynamic(clientConnector));

httpClient.start();The default SslContextFactory.Client verifies the certificate sent by the server by verifying the validity of the certificate with respect to the certificate chain, the expiration date, the server host name, etc.

This means that requests to public websites that have a valid certificate (such as https://google.com) will work out-of-the-box, without the need to specify a KeyStore or a TrustStore.

However, requests made to sites that return an invalid or a self-signed certificate will fail (like they will in a browser). An invalid certificate may be expired or have the wrong server host name; a self-signed certificate has a certificate chain that cannot be verified.

The validation of the server host name present in the certificate is important, to guarantee that the client is connected indeed with the intended server.

The validation of the server host name is performed at two levels: at the TLS level (in the JDK) and, optionally, at the application level.

By default, the validation of the server host name at the TLS level is enabled, while it is disabled at the application level.

You can configure the SslContextFactory.Client to skip the validation of the server host name at the TLS level:

SslContextFactory.Client sslContextFactory = new SslContextFactory.Client();

// Disable the validation of the server host name at the TLS level.

sslContextFactory.setEndpointIdentificationAlgorithm(null);When you disable the validation of the server host name at the TLS level, you are strongly recommended to enable it at the application level. Failing to do so puts you at risk of connecting to a server different from the one you intend to connect to:

SslContextFactory.Client sslContextFactory = new SslContextFactory.Client();

// Only allow to connect to subdomains of domain.com.

sslContextFactory.setHostnameVerifier((hostName, session) -> hostName.endsWith(".domain.com"));Enabling server host name validation at both the TLS level and application level allow you to further restrict the set of server hosts the client can connect to, among those allowed in the certificate sent by the server.

Entirely disabling server host name validation is not recommended, but may be done in controlled environments.

Even with server host name validation disabled, the validation of the certificate chain, by validating cryptographic signatures and validity dates is still performed.

Please refer to the SslContextFactory.Client javadocs for the complete list of configurable parameters.

HttpClient SslHandshakeListener

Applications may register a org.eclipse.jetty.io.ssl.SslHandshakeListener to be notified of TLS handshakes success or failure, by adding the SslHandshakeListener as a bean to HttpClient:

// Create a SslHandshakeListener.

SslHandshakeListener listener = new SslHandshakeListener()

{

@Override

public void handshakeSucceeded(Event event) throws SSLException

{

SSLEngine sslEngine = event.getSSLEngine();

System.getLogger("tls").log(INFO, "TLS handshake successful to %s", sslEngine.getPeerHost());

}

@Override

public void handshakeFailed(Event event, Throwable failure)

{

SSLEngine sslEngine = event.getSSLEngine();

System.getLogger("tls").log(ERROR, "TLS handshake failure to %s", sslEngine.getPeerHost(), failure);

}

};

HttpClient httpClient = new HttpClient();

// Add the SslHandshakeListener as bean to HttpClient.

// The listener will be notified of TLS handshakes success and failure.

httpClient.addBean(listener);HttpClient Cookie Support

Jetty’s HttpClient supports cookies out of the box.

The HttpClient instance receives cookies from HTTP responses and stores them in a java.net.CookieStore, a class that is part of the JDK.

When new requests are made, the cookie store is consulted and if there are matching cookies (that is, cookies that are not expired and that match domain and path of the request) then they are added to the requests.

Applications can programmatically access the cookie store to find the cookies that have been set:

HttpCookieStore cookieStore = httpClient.getHttpCookieStore();

List<HttpCookie> cookies = cookieStore.match(URI.create("http://domain.com/path"));Applications can also programmatically set cookies as if they were returned from a HTTP response:

HttpCookieStore cookieStore = httpClient.getHttpCookieStore();

HttpCookie cookie = HttpCookie.build("foo", "bar")

.domain("domain.com")

.path("/")

.maxAge(TimeUnit.DAYS.toSeconds(1))

.build();

cookieStore.add(URI.create("http://domain.com"), cookie);Cookies may be added explicitly only for a particular request:

ContentResponse response = httpClient.newRequest("http://domain.com/path")

.cookie(HttpCookie.from("foo", "bar"))

.send();You can remove cookies that you do not want to be sent in future HTTP requests:

HttpCookieStore cookieStore = httpClient.getHttpCookieStore();

URI uri = URI.create("http://domain.com");

List<HttpCookie> cookies = cookieStore.match(uri);

for (HttpCookie cookie : cookies)

{

cookieStore.remove(uri, cookie);

}If you want to totally disable cookie handling, you can install a HttpCookieStore.Empty.

This must be done when HttpClient is used in a proxy application, in this way:

httpClient.setHttpCookieStore(new HttpCookieStore.Empty());You can enable cookie filtering by installing a cookie store that performs the filtering logic in this way:

class GoogleOnlyCookieStore extends HttpCookieStore.Default

{

@Override

public boolean add(URI uri, HttpCookie cookie)

{

if (uri.getHost().endsWith("google.com"))

return super.add(uri, cookie);

return false;

}

}

httpClient.setHttpCookieStore(new GoogleOnlyCookieStore());The example above will retain only cookies that come from the google.com domain or sub-domains.

Special Characters in Cookies

Jetty is compliant with RFC6265, and as such care must be taken when setting a cookie value that includes special characters such as ;.

Previously, Version=1 cookies defined in RFC2109 (and continued in RFC2965) allowed for special/reserved characters to be enclosed within double quotes when declared in a Set-Cookie response header:

Set-Cookie: foo="bar;baz";Version=1;Path="/secure"This was added to the HTTP Response as follows:

protected void service(HttpServletRequest request, HttpServletResponse response)

{

jakarta.servlet.http.Cookie cookie = new Cookie("foo", "bar;baz");

cookie.setPath("/secure");

response.addCookie(cookie);

}The introduction of RFC6265 has rendered this approach no longer possible; users are now required to encode cookie values that use these special characters.

This can be done utilizing jakarta.servlet.http.Cookie as follows:

jakarta.servlet.http.Cookie cookie = new Cookie("foo", URLEncoder.encode("bar;baz", "UTF-8"));Jetty validates all cookie names and values being added to the HttpServletResponse via the addCookie(Cookie) method.

If an illegal value is discovered Jetty will throw an IllegalArgumentException with the details.

HttpClient Authentication Support

Jetty’s HttpClient supports the BASIC and DIGEST authentication mechanisms defined by RFC 7235, as well as the SPNEGO authentication mechanism defined in RFC 4559.

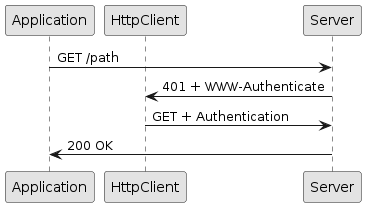

The HTTP conversation, the sequence of related HTTP requests, for a request that needs authentication is the following:

Upon receiving a HTTP 401 response code, HttpClient looks at the WWW-Authenticate response header (the server challenge) and then tries to match configured authentication credentials to produce an Authentication header that contains the authentication credentials to access the resource.

You can configure authentication credentials in the HttpClient instance as follows:

// Add authentication credentials.

AuthenticationStore auth = httpClient.getAuthenticationStore();

URI uri1 = new URI("http://mydomain.com/secure");

auth.addAuthentication(new BasicAuthentication(uri1, "MyRealm", "userName1", "password1"));

URI uri2 = new URI("http://otherdomain.com/admin");

auth.addAuthentication(new BasicAuthentication(uri1, "AdminRealm", "admin", "password"));Authentications are matched against the server challenge first by mechanism (e.g. BASIC or DIGEST), then by realm and then by URI.

If an Authentication match is found, the application does not receive events related to the HTTP 401 response.

These events are handled internally by HttpClient which produces another (internal) request similar to the original request but with an additional Authorization header.

If the authentication is successful, the server responds with a HTTP 200 and HttpClient caches the Authentication.Result so that subsequent requests for a matching URI will not incur in the additional rountrip caused by the HTTP 401 response.

It is possible to clear Authentication.Results in order to force authentication again:

httpClient.getAuthenticationStore().clearAuthenticationResults();Authentication results may be preempted to avoid the additional roundtrip due to the server challenge in this way:

AuthenticationStore auth = httpClient.getAuthenticationStore();

URI uri = URI.create("http://domain.com/secure");

auth.addAuthenticationResult(new BasicAuthentication.BasicResult(uri, "username", "password"));In this way, requests for the given URI are enriched immediately with the Authorization header, and the server should respond with HTTP 200 (and the resource content) rather than with the 401 and the challenge.

It is also possible to preempt the authentication for a single request only, in this way:

URI uri = URI.create("http://domain.com/secure");

Authentication.Result authn = new BasicAuthentication.BasicResult(uri, "username", "password");

Request request = httpClient.newRequest(uri);

authn.apply(request);

request.send();See also the proxy authentication section for further information about how authentication works with HTTP proxies.

HttpClient Proxy Support

Jetty’s HttpClient can be configured to use proxies to connect to destinations.

These types of proxies are available out of the box:

-

HTTP proxy (provided by class

org.eclipse.jetty.client.HttpProxy) -

SOCKS 4 proxy (provided by class

org.eclipse.jetty.client.Socks4Proxy) -

SOCKS 5 proxy (provided by class

org.eclipse.jetty.client.Socks5Proxy)

Other implementations may be written by subclassing ProxyConfiguration.Proxy.

The following is a typical configuration:

HttpProxy proxy = new HttpProxy("proxyHost", 8888);

// Do not proxy requests for localhost:8080.

proxy.getExcludedAddresses().add("localhost:8080");

// Add the new proxy to the list of proxies already registered.

ProxyConfiguration proxyConfig = httpClient.getProxyConfiguration();

proxyConfig.addProxy(proxy);

ContentResponse response = httpClient.GET("http://domain.com/path");You specify the proxy host and proxy port, and optionally also the addresses that you do not want to be proxied, and then add the proxy configuration on the ProxyConfiguration instance.

Configured in this way, HttpClient makes requests to the HTTP proxy (for plain-text HTTP requests) or establishes a tunnel via HTTP CONNECT (for encrypted HTTPS requests).

Proxying is supported for any version of the HTTP protocol.

The communication between the client and the proxy may be encrypted, so that it would not be possible for another party on the same network as the client to know what servers the client connects to.

SOCKS5 Proxy Support

SOCKS 5 (defined in RFC 1928) offers choices for authentication methods and supports IPv6 (things that SOCKS 4 does not support).

A typical SOCKS 5 proxy configuration with the username/password authentication method is the following:

Socks5Proxy proxy = new Socks5Proxy("proxyHost", 8888);

String socks5User = "jetty";

String socks5Pass = "secret";

var socks5AuthenticationFactory = new Socks5.UsernamePasswordAuthenticationFactory(socks5User, socks5Pass);

// Add the authentication method to the proxy.

proxy.putAuthenticationFactory(socks5AuthenticationFactory);

// Do not proxy requests for localhost:8080.

proxy.getExcludedAddresses().add("localhost:8080");

// Add the new proxy to the list of proxies already registered.

ProxyConfiguration proxyConfig = httpClient.getProxyConfiguration();

proxyConfig.addProxy(proxy);

ContentResponse response = httpClient.GET("http://domain.com/path");HTTP Proxy Authentication Support

Jetty’s HttpClient supports HTTP proxy authentication in the same way it supports server authentication.

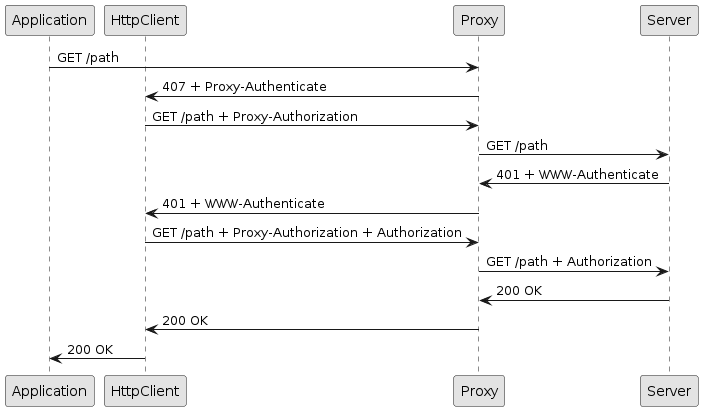

In the example below, the HTTP proxy requires BASIC authentication, but the server requires DIGEST authentication, and therefore:

AuthenticationStore auth = httpClient.getAuthenticationStore();

// Proxy credentials.

URI proxyURI = new URI("http://proxy.net:8080");

auth.addAuthentication(new BasicAuthentication(proxyURI, "ProxyRealm", "proxyUser", "proxyPass"));

// Server credentials.

URI serverURI = new URI("http://domain.com/secure");

auth.addAuthentication(new DigestAuthentication(serverURI, "ServerRealm", "serverUser", "serverPass"));

// Proxy configuration.

ProxyConfiguration proxyConfig = httpClient.getProxyConfiguration();

HttpProxy proxy = new HttpProxy("proxy.net", 8080);

proxyConfig.addProxy(proxy);

ContentResponse response = httpClient.newRequest(serverURI).send();The HTTP conversation for successful authentications on both the proxy and the server is the following:

The application does not receive events related to the responses with code 407 and 401 since they are handled internally by HttpClient.

Similarly to the authentication section, the proxy authentication result and the server authentication result can be preempted to avoid, respectively, the 407 and 401 roundtrips.

HttpClient Pluggable Transports

Jetty’s HttpClient can be configured to use different HTTP formats to carry the semantic of HTTP requests and responses, by specifying different HttpClientTransport implementations.

This means that the intention of a client to request resource /index.html using the GET method can be carried over a low-level transport in different formats.

An HttpClientTransport is the component that is in charge of converting a high-level, semantic, HTTP requests such as " GET resource /index.html " into the specific format understood by the server (for example, HTTP/2 or HTTP/3), and to convert the server response from the specific format (HTTP/2 or HTTP/3) into high-level, semantic objects that can be used by applications.

The most common protocol format is HTTP/1.1, a textual protocol with lines separated by \r\n:

GET /index.html HTTP/1.1\r\n

Host: domain.com\r\n

...

\r\nHowever, the same request can be made using FastCGI, a binary protocol:

x01 x01 x00 x01 x00 x08 x00 x00

x00 x01 x01 x00 x00 x00 x00 x00

x01 x04 x00 x01 xLL xLL x00 x00

x0C x0B D O C U M E

N T _ U R I / i

n d e x . h t m

l

...Similarly, HTTP/2 is a binary protocol that transports the same information in a yet different format via TCP, while HTTP/3 is a binary protocol that transports the same information in yet another format via QUIC.

The HTTP protocol version may be negotiated between client and server. A request for a resource may be sent using one protocol (for example, HTTP/1.1), but the response may arrive in a different protocol (for example, HTTP/2).

HttpClient supports these HttpClientTransport implementations, each speaking only one protocol:

HttpClient also supports HttpClientTransportDynamic, a dynamic transport that can speak different HTTP formats and can select the right protocol by negotiating it with the server or by explicit indication from applications.

Furthermore, every HTTP format can be sent over different low-level transports such as TCP, Unix-Domain, QUIC or memory. Supports for Unix-Domain sockets requires Java 16 or later, since Unix-Domain sockets support has been introduced in OpenJDK with JEP 380.

Applications are typically not aware of the actual HTTP format or low-level transport being used. This allows them to write their logic against a high-level API that hides the details of the specific HTTP format and low-level transport being used.

HTTP/1.1 Transport

HTTP/1.1 is the default transport.

// No transport specified, using default.

HttpClient httpClient = new HttpClient();

httpClient.start();If you want to customize the HTTP/1.1 transport, you can explicitly configure it in this way:

// Configure HTTP/1.1 transport.

HttpClientTransportOverHTTP transport = new HttpClientTransportOverHTTP();

transport.setHeaderCacheSize(16384);

HttpClient client = new HttpClient(transport);

client.start();HTTP/2 Transport

The HTTP/2 transport can be configured in this way:

// The HTTP2Client powers the HTTP/2 transport.

HTTP2Client http2Client = new HTTP2Client();

http2Client.setInitialSessionRecvWindow(64 * 1024 * 1024);

// Create and configure the HTTP/2 transport.

HttpClientTransportOverHTTP2 transport = new HttpClientTransportOverHTTP2(http2Client);

transport.setUseALPN(true);

HttpClient client = new HttpClient(transport);